Linear takes $4.2M led by Sequoia to build a better bug tracker and more

Software will eat the world, as the saying goes, but in doing so, some developers are likely to get a little indigestion. That is to say, building products requires working with disparate and distributed teams, and while developers may have an ever-growing array of algorithms, APIs and technology at their disposal to do this, ironically the platforms to track it all haven’t evolved with the times. Now three developers have taken their own experience of that disconnect to create a new kind of platform, Linear, which they believe addresses the needs of software developers better by being faster and more intuitive. It’s bug tracking you actually want to use.

Today, Linear is announcing a seed round of $4.2 million led by Sequoia, with participation from Index Ventures and a group of investors, startup founders and others who will also advise Linear as it grows. They include Dylan Field (Founder and CEO, Figma), Emily Choi (COO, Coinbase), Charlie Cheever (Co-Founder of Expo & Quora), Gustaf Alströmer (Partner, Y Combinator), Tikhon Bernstam (Co-Founder, Parse), Larry Gadea (CEO, Envoy), Jude Gomila (CEO, Golden), James Smith (CEO, Bugsnag), Fred Stevens-Smith (CEO, Rainforest), Bobby Goodlatte, Marc McGabe, Julia DeWahl and others.

Cofounders Karri Saarinen, Tuomas Artman, and Jori Lallo — all Finnish but now based in the Bay Area — know something first-hand about software development and the trials and tribulations of working with disparate and distributed teams. Saarinen was previously the principal designer of Airbnb, as well as the first designer of Coinbase; Artman had been staff engineer and architect at Uber; and Lallo also had been at Coinbase as a senior engineer building its API and front end.

“When we worked at many startups and growth companies we felt that the tools weren’t matching the way we’re thinking or operating,” Saarinen said in an email interview. “It also seemed that no one had taken a fresh look at this as a design problem. We believe there is a much better, modern workflow waiting to be discovered. We believe creators should focus on the work they create, not tracking or reporting what they are doing. Managers should spend their time prioritizing and giving direction, not bugging their teams for updates. Running the process shouldn’t sap your team’s energy and get in the way of creating.”

Linear cofounders (from left): Karri Saarinen, Jori Lallo, and Tuomas Artman

All of that translates to, first and foremost, speed and a platform whose main purpose is to help you work faster. “While some say speed is not really a feature, we believe it’s the core foundation for tools you use daily,” Saarinen noted.

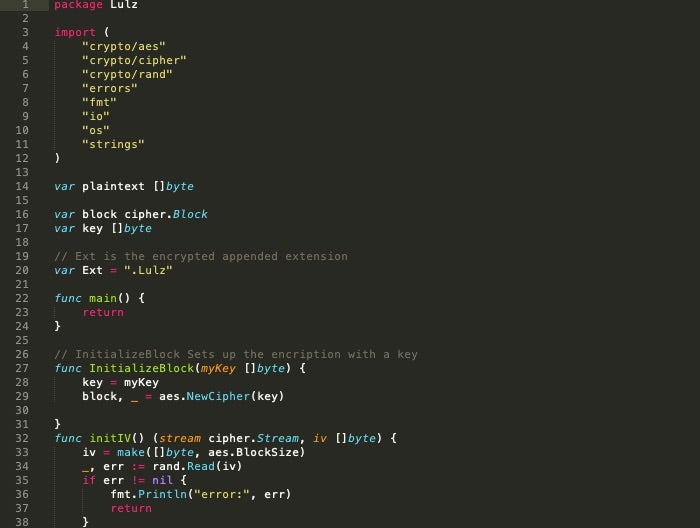

A ⌘K command calls up a menu of shortcuts to edit an issue’s status, assign a task, and more so that everything can be handled with keyboard shortcuts. Pages load quickly and synchronise in real time (and search updates alongside that). Users can work offline if they need to. And of course there is also a dark mode for night owls.

The platform is still very much in its early stages. It currently has three integrations with some of the most common tools used by developers: GitHub (where you can link pull requests and close Linear issues on merge), Figma (where you can get image previews and embeds of designs), and Slack (where you can create issues and then get notifications on updates). There are plans to add more over time.

“We started solving the problem from the end-user perspective, the contributor, like an engineer or a designer and starting to address things that are important for them, can help them and their teams,” Saarinen said. “We aim to also bring clarity for the teams by making the concepts simple, clear but powerful. For example, instead of talking about epics, we have Projects that help track larger feature work or tracks of work.”

Indeed, speed is not the only aim with Linear. Saarinen said another area they hope to address is general work practices, moving away from time spent on manual management and toward automating that process.

“Right now at many companies you have to manually move things around, schedule sprints, and all kinds of other minor things,” he said. “We think that next generation tools should have built in automated workflows that help teams and companies operate much more effectively. Teams shouldn’t spend a third or more of their time a week just for running the process.”

The last objective Linear is hoping to tackle is one that we’re often sorely lacking in the wider world, too: context.

“Companies are setting their high-level goals, roadmaps and teams work on projects,” he said. “Often leadership doesn’t have good visibility into what is actually happening and how projects are tracking. Teams and contributors don’t always have the context or understanding of why they are working on the things, since you cannot follow the chain from your task to the company goal. We think that there are ways to build Linear to be a real-time picture of what is happening in the company when it comes to building products, and give the necessary context to everyone.”

Linear is a late entrant in a world filled with collaboration apps, and specifically workflow and collaboration apps targeting the developer community. These include not just Slack and GitHub, but Atlassian’s Trello and Jira, as well as Asana, Basecamp and many more.

Saarinen would not be drawn on which of these (or others) Linear sees as direct competition, arguing instead that none of them address developers’ needs for speed, ease of use and context as well as Linear does.

“There are many tools in the market and many companies are talking about making ‘work better,’” he said. “And while there are many issue tracking and project management tools, they are not supporting the workflow of the individual and team. A lot of the value these tools sell is around tracking work that happens, not actually helping people to be more effective. Since our focus is on the individual contributor and intelligent integration with their workflow, we can support them better and as a side effect makes the information in the system more up to date.”

Stephanie Zhan, the Sequoia partner who specializes in seed and Series A investments and who led this round, said that Linear first came onto her radar when it launched its private beta. (It is still in private beta and has been running a waitlist to bring on new users; in that time it has picked up hundreds of companies, including Pitch, Render, Albert, Curology, Spoke, Compound and YC startups including Middesk, Catch and Visly.) The company had also been flagged by one of Sequoia’s Scouts, who invested earlier this year.

Although Linear is based in San Francisco, its three founders have their roots in Finland (Saarinen is in Helsinki this week to speak at Slush), which points to an emerging trend of Silicon Valley VCs looking at founders from further afield than their own backyard.

“The interesting thing about Linear is that as they’re building a software company around the future of work, they’re also building a remote and distributed team themselves,” Zhan said. The company currently has only four employees.

In that vein, we (and others, it seems) had heard that Sequoia — which today invests in several Europe-based startups, including Tessian, Graphcore, Klarna, Tourlane, Evervault and CEGX — has been considering establishing a more permanent presence in this part of the world, specifically in London.

Sources familiar with the firm, however, tell us that while it has been sounding out VCs at other firms, saying a London office is on the horizon might be premature, as there are as yet no plans to set up shop here. Still, with more European companies and founders entering its portfolio, and if more of those conversations turn into VCs deciding to make the leap and help Sequoia source startups, we could see this strategy turn around quickly.
