macOS Catalina | The Big Upgrade, Don’t Get Caught Out!

Tuesday saw Apple drop the first public release of macOS Catalina, a move which has caught out a number of developers, including some offering security solutions, as well as organizations and ordinary macOS users. While SentinelOne is already Catalina-compatible (more details below), Apple’s unannounced release date has left some scrambling to catch up as macOS 10.15 introduces some major changes under the hood, undoubtedly the biggest we’ve seen in some time. Anyone considering a Catalina upgrade should be aware of how these changes could affect current enterprise workflows, whether further updates for dependency code are required and are available, and whether the new version of macOS is going to necessitate a shift to new software or working practices. In this post, we cover the major changes and challenges that Catalina brings to enterprise macOS fleets.

Does SentinelOne Work With macOS Catalina?

First things first: Yes, it does. SentinelOne macOS Agent version 3.2.1.2800 was rolled out on the same day that Apple released macOS 10.15 Catalina. This Agent is supported with Management Consoles Grand Canyon & Houston. Ideally, you should update your SentinelOne Agent version before updating to Catalina to ensure the smoothest upgrade flow.

Developers Play Catalina Catch-up

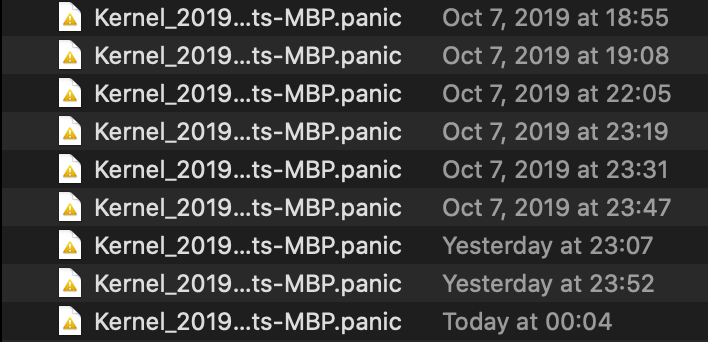

Contrary to popular (mis)belief, kexts or kernel extensions are still alive and well in Catalina, and the move to a new “kextless” future with Apple’s SystemExtensions framework remains optional at least for the time being. However, that doesn’t mean your current array of kernel extensions from other developers is necessarily going to be unproblematic during an upgrade.

New rules for kexts mean developers at a minimum have to notarize them, and users will have to restart the Mac after approving them. On top of that, developers – particularly those distributing security software – will need to update their kexts and solutions to be compatible with Catalina’s new TCC and user privacy rules, changes in partition architecture and discontinued support for 32-bit apps (see below), among other things.

Upgrading a Mac to 10.15 with incompatible kexts already installed could lead to one or more kernel panics.

The safest bet is to contact vendors to check on their Catalina support before you pull the trigger on the Catalina upgrade. If for some reason that’s not possible or you have legacy kexts installed which are out of support, the best advice is to remove those before you upgrade a test machine, then immediately test for compatibility as part of your post-install routine.
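To know which vendors to contact, it helps to list the non-Apple kexts currently loaded on a machine. The following is a minimal sketch rather than a definitive tool: it filters the text printed by the `kextstat` command, and it assumes each data line shows the bundle identifier immediately before a parenthesised version string. The function names are hypothetical.

```python
import subprocess
from typing import List


def third_party_kexts(kextstat_output: str) -> List[str]:
    """Return bundle identifiers of loaded kexts that are not from Apple.

    Assumption: each data line contains the bundle identifier directly
    before a parenthesised version, e.g. "com.example.driver (1.0.0)".
    """
    found = []
    for line in kextstat_output.splitlines():
        tokens = line.split()
        # Walk adjacent token pairs looking for "<bundle.id> (<version>)".
        for previous, current in zip(tokens, tokens[1:]):
            if current.startswith("(") and "." in previous:
                if not previous.startswith("com.apple."):
                    found.append(previous)
                break
    return found


def loaded_third_party_kexts() -> List[str]:
    """Run kextstat on the local Mac and filter its output (macOS only)."""
    result = subprocess.run(
        ["kextstat"], capture_output=True, text=True, check=True
    )
    return third_party_kexts(result.stdout)
```

Calling `loaded_third_party_kexts()` on a test Mac before the upgrade gives a short list of identifiers to check against each vendor's Catalina support statement.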

Bye Bye, 32-Bit Applications

Apple called time on 32-bit applications several releases ago, offering increasingly urgent warnings of their impending doom through High Sierra and Mojave. In macOS Mojave, these apps would still run after users dismissed the one-time warning alert, but Catalina finally drops the axe: 32-bit applications will not launch at all on macOS 10.15.

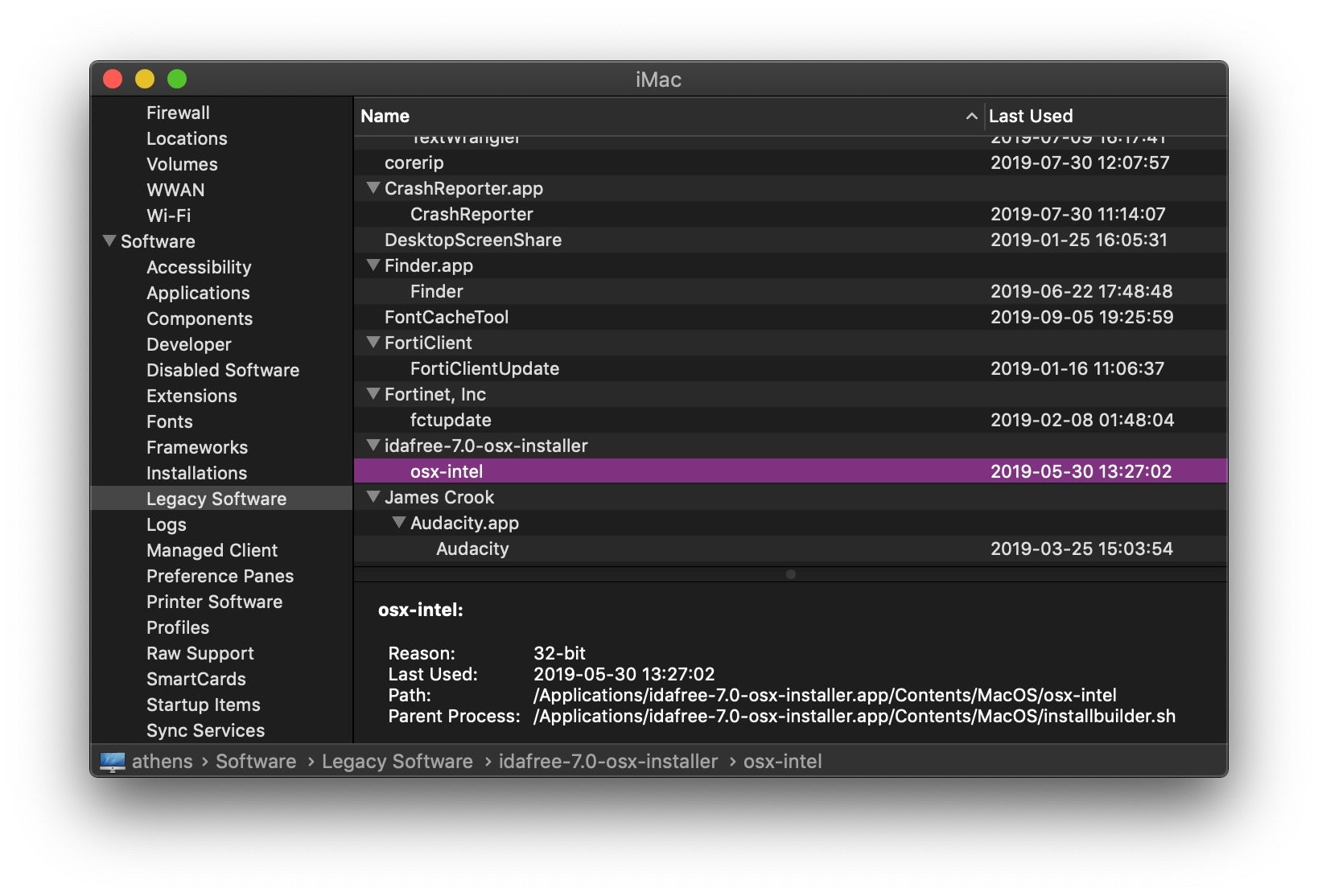

Before upgrading, check what legacy applications you have installed. From the command line, you can output a report with:

system_profiler SPLegacySoftwareDataType

GUI users can go to Apple menu > About This Mac and click the System Report… button.

Scroll down the sidebar to “Legacy Apps” and click on it. Here you’ll see a list of all the apps that won’t run on Catalina. macOS 10.15 itself will also list any legacy apps during the upgrade process, but it’s wise to be prepared before you get that far.
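For a fleet, the same report can be collected programmatically. The sketch below asks `system_profiler` for XML (property list) output via its `-xml` flag and extracts the application names. It assumes the usual `system_profiler` plist layout, a top-level array of sections whose `_items` entries each carry a `_name` key. The function names are hypothetical.

```python
import plistlib
import subprocess
from typing import List


def legacy_app_names(report: bytes) -> List[str]:
    """Extract app names from `system_profiler -xml` plist output.

    Assumption: the plist is an array of sections, each with an
    "_items" list whose entries have a "_name" key.
    """
    sections = plistlib.loads(report)
    names = []
    for section in sections:
        for item in section.get("_items", []):
            name = item.get("_name")
            if name:
                names.append(name)
    return sorted(names)


def local_legacy_apps() -> List[str]:
    """Run the legacy software report on the local Mac (macOS only)."""
    result = subprocess.run(
        ["system_profiler", "-xml", "SPLegacySoftwareDataType"],
        capture_output=True,
        check=True,
    )
    return legacy_app_names(result.stdout)
```

An empty list from `local_legacy_apps()` suggests no 32-bit applications were found on that machine. Anything it returns should be replaced or updated before the upgrade.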

VPP & Apple School/Business Manager Support

Catalina continues to allow various enterprise upgrade paths through its Mobile Device Management (MDM) framework, Device Enrollment Program (DEP) and Apple Configurator. For organizations enrolled in Apple’s Volume Purchase Program or with Apple Business Manager or Apple School Manager licensing, Catalina is supported right out of the door, saving you the bother of having to manually download, package and then install multiple instances of 10.15.

New in Catalina are Managed Apple IDs for Business, which attempt to separate the user’s work identity from their personal identity, allowing them to use separate accounts for things like iCloud Notes, iCloud Drive, Mail, Contacts and other services.

There is a plus here for user privacy, but for admins used to having total control over managed endpoints, be aware that a device with an enrollment profile and Managed Apple ID means the business loses power over things like remote wipe and access to certain user data. Effectively, the device is separated into “personal” and “managed” (i.e., business use), with a separate APFS volume for the managed accounts, apps and data.

Privacy Controls Reach New Heights

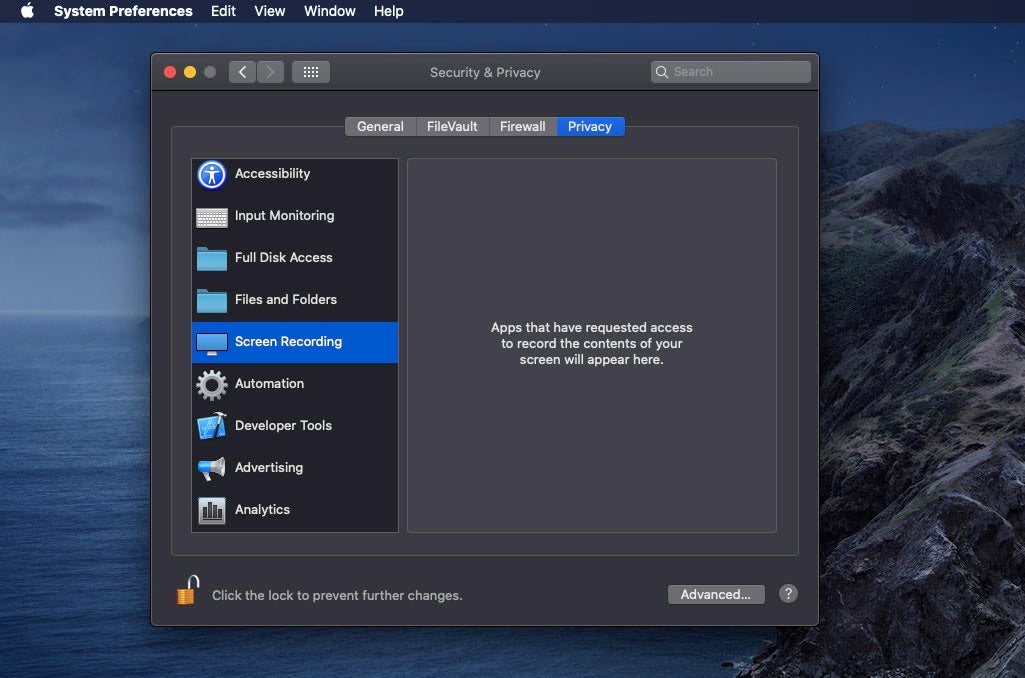

That’s not the only thing to be aware of with regard to user data. The biggest change that end users are going to notice as they get to work on a newly upgraded macOS 10.15 Catalina install is Apple’s extended privacy control policies, which will manifest themselves in a number of ways.

In the earlier macOS 10.14 Mojave, there are 12 items listed in the Privacy tab of the Security & Privacy pane in System Preferences. Catalina adds five more: Speech Recognition, Input Monitoring, Files and Folders, Screen Recording, and Developer Tools.

Here’s what three of them control:

- Speech Recognition: Apps that have requested access to speech recognition.

- Input Monitoring: Apps that have requested access to monitor input from your keyboard.

- Screen Recording: Apps that have requested access to record the contents of the screen.

Importantly, the three items above can only be allowed at the specific time when applications try to touch any of these services. Although applications can be pre-denied by MDM provisioning and configuration profiles, they cannot be pre-allowed. That has important implications for your workflows since any software in the enterprise that requires these permissions must obtain user approval in the UI in order to function correctly, or indeed at all. Be aware that Catalina’s implementation of Transparency, Consent and Control is not particularly forthcoming with feedback. Applications may simply silently fail when permission is denied.
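To illustrate what pre-denying looks like, here is a hedged sketch that builds a fragment of a Privacy Preferences Policy Control payload as a property list. The payload type `com.apple.TCC.configuration-profile-policy`, the service names `ScreenCapture` and `ListenEvent` (Input Monitoring), and the `Allowed` key reflect our understanding of Apple's profile schema and should be checked against Apple's documentation. The bundle identifier and code requirement are hypothetical placeholders. A deployable profile also needs the standard payload identifiers and an enclosing configuration profile, which are omitted here.

```python
import plistlib
from typing import Dict, List


def deny_payload(bundle_id: str, code_requirement: str,
                 services: List[str]) -> Dict:
    """Build a payload fragment that pre-denies the given services.

    Assumption: key names follow Apple's Privacy Preferences Policy
    Control schema; "Allowed" is False because these services can be
    denied by profile but not granted.
    """
    entry = {
        "Identifier": bundle_id,
        "IdentifierType": "bundleID",
        "CodeRequirement": code_requirement,
        "Allowed": False,
    }
    return {
        "PayloadType": "com.apple.TCC.configuration-profile-policy",
        # One independent copy of the entry per service.
        "Services": {service: [dict(entry)] for service in services},
    }


# Hypothetical app and code requirement, for illustration only.
payload = deny_payload(
    "com.example.meetingapp",
    'identifier "com.example.meetingapp"',
    ["ScreenCapture", "ListenEvent"],
)
payload_xml = plistlib.dumps(payload).decode("utf-8")
```

Setting `Allowed` to true for these services is not expected to take effect, which is why any software that needs them still depends on the user approving the prompt.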

The most obvious, but certainly not only, place where privacy controls are going to cause issues is with video meeting/conferencing software like Zoom, Skype and similar. Prompts from the OS that suggest applications must be restarted after permission has been granted for certain services like Screen Recording have raised fears that clicking ‘Allow’ during a meeting might kick users out of the conference while the app re-launches. Conversely, users who inadvertently click ‘Don’t Allow’ may wonder why later attempts to use the software continue to fail.

What all this means is that with macOS Catalina, there is a greater onus on sysadmins to engage in user education to preempt these kinds of issues before they arise. Thoroughly test how the apps you rely on are going to behave and what workflow users need to follow to ensure minimal interruption to their daily activities.

The remaining two additional items are:

- Files and Folders: Allow the apps in the list to access files and folders.

- Developer Tools: Allow the apps in the list to run software locally that does not meet the system's security policy.

These last two can both be pre-approved. The first grants access to user files in places like Desktop, Downloads, and Documents folders. The second allows developers to run their own software that isn’t yet notarized, signed or ready to be distributed (and thus subject to macOS’s full system policy).

And New Lows…

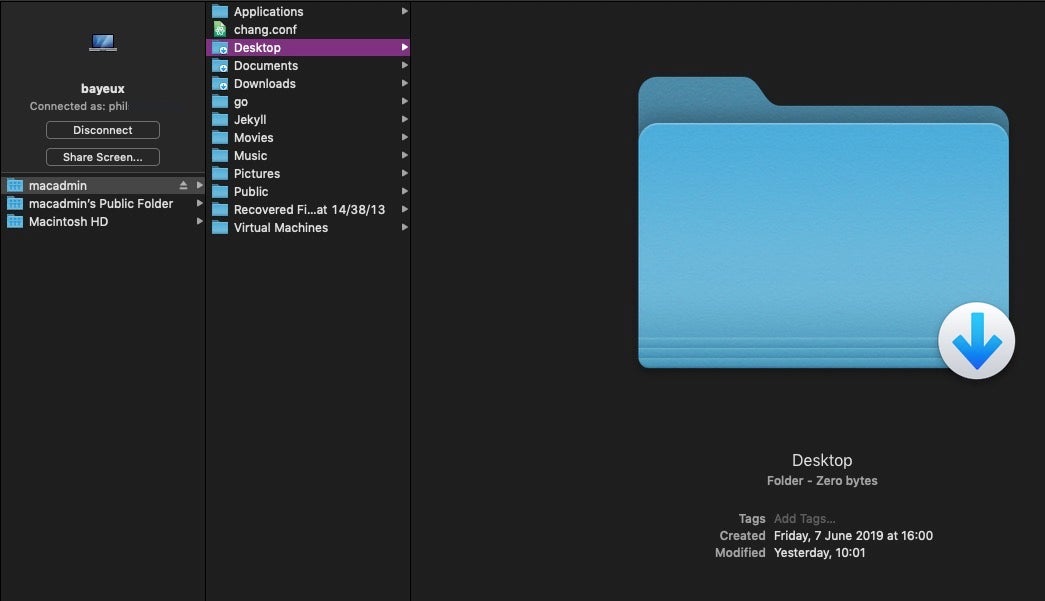

Here’s a good example of what all this might mean in practice. Let’s take as destination a user’s machine on which File Sharing, Remote Management (which allows Screen Sharing) and Remote Login (for SSH) have been enabled.

Suppose, as admin, I choose to both Screen Share and File Share from my source machine into this user’s computer. Both services use the same credentials (user name and password for a registered user on the destination device), which need to be entered only once per session to enable both at the same time, yet the two services have confusingly different restrictions.

Trying to navigate to the destination’s Desktop folder via File Sharing in the Finder from the source indicates that the user’s Desktop folder is empty rather than inaccessible.

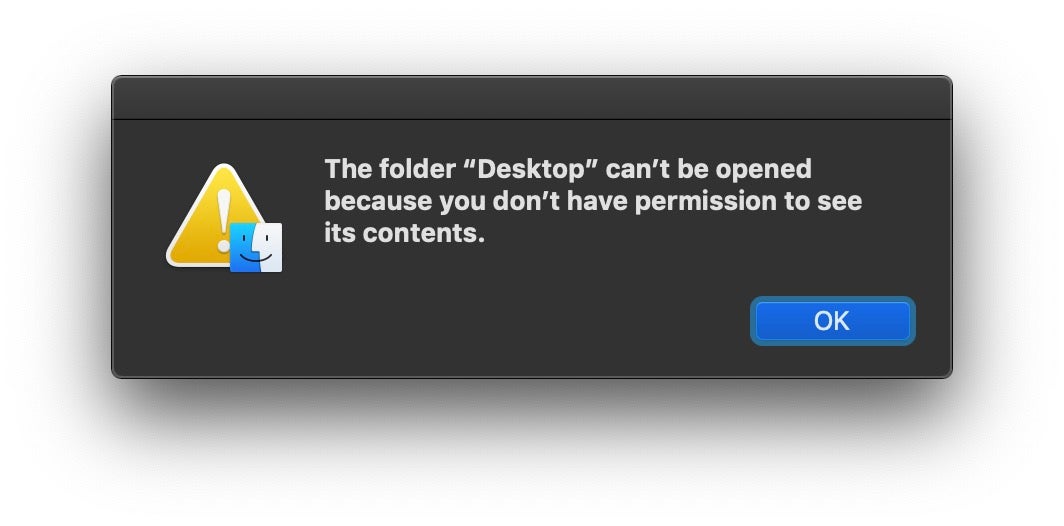

If I persist in trying to access any of these protected folders, the misleading Finder display is eventually replaced with a permission denied alert.

While Screen Sharing in the same session, however, I can see the Desktop folder’s contents without a problem; in fact, in this case it contains 17 items. Indeed, via Screen Sharing, I can move these items from the Desktop folder to any other folder that is accessible through File Sharing, such as the ~/Public folder. That, in a roundabout and inconvenient way, means I can get past the permission denial thrown above. Further, because I can enable other services in the Privacy pane from my Screen Sharing session, such as Full Disk Access, I can also use those to grant myself SSH access, with which I am similarly also able to work around the File Share permission denied problem.

This kind of inconsistency and complexity is unfortunate. Aside from making legitimate users jump through these hoops for no security pay-off, it raises this question: what does a legitimate user need to do to make File Sharing work properly? It seems we should go to the Files and Folders pane in System Preferences and add the required process. But what process needs to be added? There’s simply no help here for those trying to figure out how to manage Apple’s user privacy controls. As it turns out, there also appears to be a bug in the UI that prevents anything at all being added to Files and Folders, so at the moment we can’t answer that question for you either.

Catalina’s Vista of Alerts: Cancel or Allow?

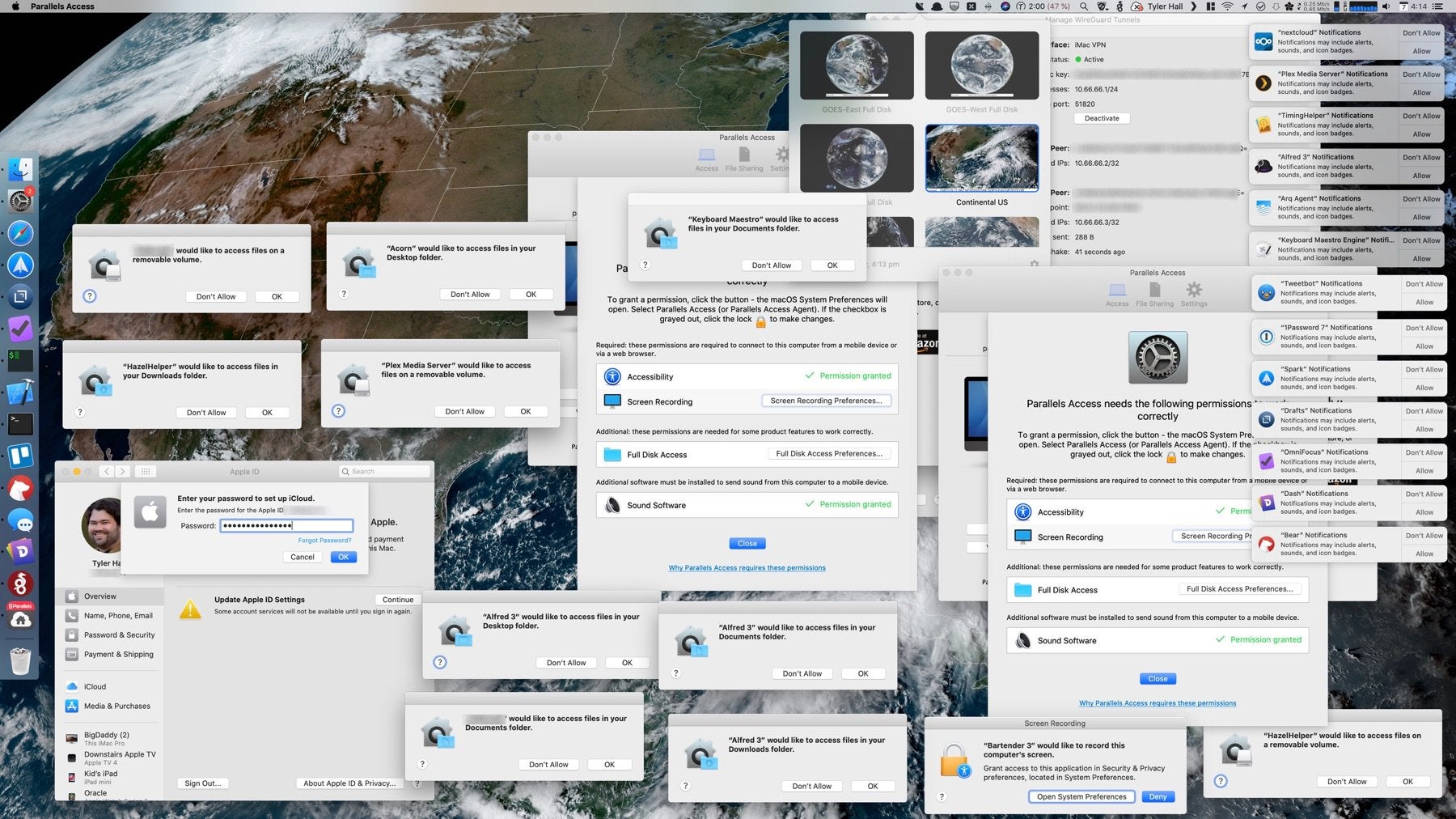

This expansion of user privacy controls has one very significant and obvious consequence for everyone using macOS 10.15 Catalina, graphically portrayed in this tweet by Tyler Hall.

The spectacle of numerous alerts has made some liken Apple’s investment in user privacy through consent to Microsoft’s much-maligned Windows Vista release, which had a similarly poor reputation for irritating users with an array of constant popups and dialogs, many of which seemed quite unnecessary.

Yes, your macOS users are going to be hit by a plethora of authorization requests, alerts and notifications. While Tyler Hall’s image was undoubtedly designed to illustrate the effect in dramatic fashion, there’s no doubt that Catalina’s insistence on popping alerts is going to irritate many users who upgrade and then try to get down to some work, only to be interrupted multiple times. However, if the trade-off for a bit of disruption to workflows is improved security, then that’s surely not such a bad thing?

The question is whether security is improved in this way or not. Experience has taught malware authors that users are easily manipulated, a well-recognized phenomenon that led to the coining of the phrase “social engineering” and the prevalence of phishing and spearphishing attacks as the key to business compromise.

On the one hand, some will feel that these kinds of alerts and notifications help educate users about what applications are doing – or attempting to do – behind the scenes, and user education is always a net positive in terms of security.

On the other hand, the reality is that most users are simply trying to use a device to get work done. Outside of admins, IT and security folk, the overwhelming majority of users have no interest in how devices work or what applications are doing, as much as we ‘tech people’ would like it to be otherwise. What users want is to be productive, and they expect technology and policy to ensure that they are productive in a safe environment rather than harangued by lots of operating system noise.

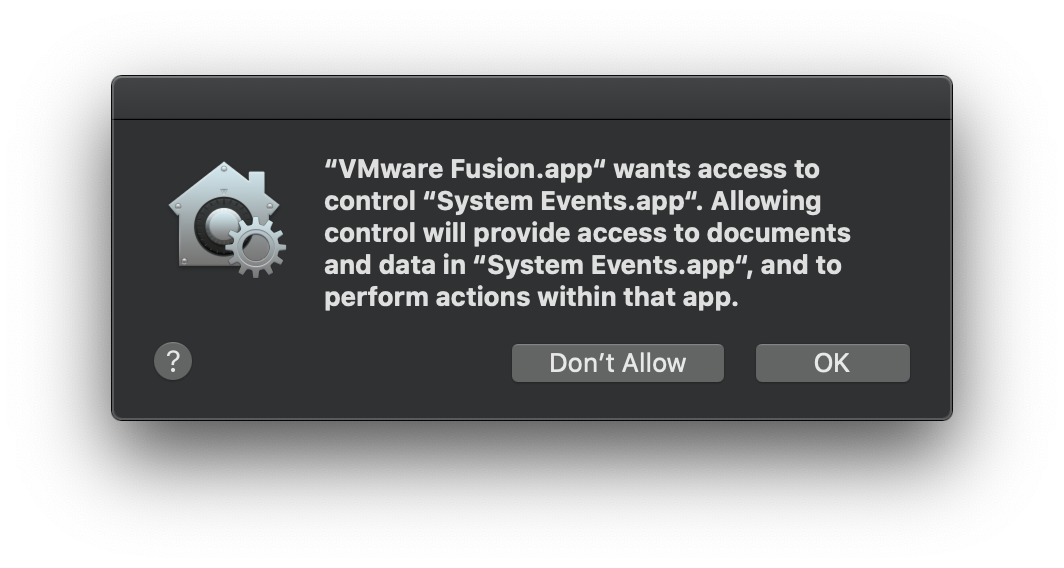

The alert shown above illustrates the point. How informative would that really be to most users, who are unlikely to have even heard of System Events.app or understand the consequences adumbrated in the message text?

Critically, consent dialogs rely on the user making an immediate decision about security for which they are not sufficiently informed, at a time when it’s not convenient, and at the prompting of an “actor” (the application that’s driving the alert and whose developer writes the alert message text) whose interests lie in the user choosing to allow.

As the user has opened the application with the intent to do something productive, their own interests lie in responding quickly and taking the path that will cause least further interruption. In that context, it seems that users are overwhelmingly likely to choose to allow the request regardless of whether that’s the most secure thing to do or not.

The urgency of time, the paucity of information and the combined interests of the user and the developer to get the app up and running conspire to make these kinds of controls a poor choice for a security mechanism. We talk a lot about “defense in depth”, but when a certain layer of that security posture relies on annoying users with numerous alerts, it could be argued that technology is failing the user. Security needs to be handled in a better way that leaves users to get on with their work and lets automated security solutions take care of the slog of deciding what’s malicious and what’s not.

Conclusion

If you are an enterprise invested in a Mac fleet, then upgrading to Catalina is a question of “when” rather than “if”. Given the massive changes presented by Catalina – from dropping support for 32-bit apps and compatibility issues with existing kernel extensions to new restrictions on critical business software like meeting apps and user consent alerts – there’s no doubt that that’s a decision not to be rushed into. Test your workflows, look at your current dependencies and roll out your upgrades with caution.
